Event accumulation

Events are a sparse representation of brightness changes measured by the pixels of a camera sensor. Accumulation is a method that turns a stream of events into a frame representation. The dv-processing library provides a few highly optimized implementations of event accumulation, each producing a different frame-like representation of the events.

Definitions

Certain terms are used throughout this chapter and within the accumulator API. The following list gives these terms and their meaning in the context of the accumulator:

Potential - normalized pixel brightness value in some floating point range; it is an internal representation range for brightness that can be scaled into other brightness representations;

Contribution - a numeric value that an event contributes to the brightness of a pixel;

Decay - pixel brightness correction applied over time when no events contribute to its brightness;

Neutral potential - a default pixel brightness without any contribution;

Minimum / maximum potential - limits for potential value representation.

Accumulator

The dv::Accumulator is a generalized implementation of a few accumulation algorithms that can be configured using class methods. The following sections describe the configuration options available for the accumulator. The final part of this section provides a code sample that uses all of these configuration options to accumulate events from a live camera.

Decay function and decay param

One of None, Linear, Exponential, Step. Defines the data degradation function that is applied to the image. The meaning of the Decay param setting depends on the selected function:

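| Function | Enum value | Decay param function | Explanation |
|---|---|---|---|
| None | dv::Accumulator::Decay::NONE | No function | Does not apply any decay. |
| Linear | dv::Accumulator::Decay::LINEAR | The slope | Pixel intensity decays linearly over time at the rate given by the slope. |
| Exponential | dv::Accumulator::Decay::EXPONENTIAL | The time constant | Pixel intensity decays exponentially over time with the given time constant. |
| Step | dv::Accumulator::Decay::STEP | No function | Sets all pixel values to neutral potential after a frame is extracted. |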

The decay function can be set using dv::Accumulator::setDecayFunction() method.
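As a rough illustration of how the four decay functions differ, the sketch below models decay for a single pixel potential in plain Python. It does not use dv-processing. The function `decay_potential`, its parameters, the `target` level the pixel relaxes toward, and the exact formulas are all assumptions made for illustration; they are not the library's implementation.

```python
import math


def decay_potential(potential, elapsed, function, param, target):
    """Return the potential of one pixel after `elapsed` time units.

    `function` is one of "none", "linear", "exponential", "step".
    `target` is the level the pixel relaxes toward (hypothetical parameter).
    """
    if function == "none":
        return potential
    if function == "linear":
        # Move toward the target by slope * elapsed, without overshooting it.
        step = param * elapsed
        if potential > target:
            return max(target, potential - step)
        return min(target, potential + step)
    if function == "exponential":
        # The distance to the target shrinks by exp(-elapsed / time_constant).
        return target + (potential - target) * math.exp(-elapsed / param)
    if function == "step":
        # Reset outright; in the accumulator this happens once per generated frame.
        return target
    raise ValueError(f"unknown decay function: {function}")


# Compare the four functions on the same starting potential.
for name, param in (("none", 0.0), ("linear", 0.1), ("exponential", 2.0), ("step", 0.0)):
    print(name, decay_potential(0.8, 2.0, name, param, target=0.5))
```

In this model a larger slope makes linear decay faster, whereas a larger time constant makes exponential decay slower.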

Event Contribution

The contribution a single event makes to the image. If an event arrives at position x, y, the pixel value in the frame at x, y is increased or decreased by the value of Event contribution, depending on the event's polarity.

Exceptions:

If the resulting pixel value would be higher than Max potential, the value is set to Max potential instead.

If the resulting pixel value would be lower than Min potential, the value is set to Min potential instead.

If the event polarity is negative and Ignore polarity is enabled, the event is counted positively.

Event contribution can be set using the dv::Accumulator::setEventContribution() method.
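The update rule and its three exceptions can be sketched for a single pixel as follows. This is a plain-Python illustration; `apply_event` and its parameter names are hypothetical and are not part of the dv-processing API.

```python
def apply_event(potential, polarity, contribution,
                min_potential, max_potential, ignore_polarity=False):
    """Return the new potential of a pixel after one event hits it."""
    # With ignore polarity enabled, negative events count as positive.
    positive = polarity or ignore_polarity
    updated = potential + contribution if positive else potential - contribution
    # Clamp the result into [min_potential, max_potential].
    return max(min_potential, min(max_potential, updated))


# A positive event near the upper limit saturates at the maximum potential.
print(apply_event(0.95, True, 0.15, 0.0, 1.0))
```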

Min potential / Max potential

Sets the minimum and maximum values a pixel can reach. If the value of a pixel would go above the maximum or below the minimum, it is capped at these values. These values are also used for normalization at the output. The frame the accumulator generates is an unsigned 8-bit grayscale image, normalized between Min potential and Max potential. A pixel with the value Min potential corresponds to a pixel with the value 0 in the output frame. A pixel with the value Max potential corresponds to a pixel with the value 255 in the output frame.

Min potential and Max potential can be set using the dv::Accumulator::setMinPotential() and dv::Accumulator::setMaxPotential() methods.
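The output normalization can be sketched as a linear mapping from the potential range onto 0..255. The helper `potential_to_pixel` below is hypothetical, and the rounding of intermediate values is an assumption; only the two end points (Min potential to 0, Max potential to 255) come from the description in this section.

```python
def potential_to_pixel(potential, min_potential, max_potential):
    """Map a potential to an unsigned 8-bit grayscale value."""
    # Cap the potential at the configured limits first.
    clamped = max(min_potential, min(max_potential, potential))
    # Scale linearly so that the minimum maps to 0 and the maximum to 255.
    scale = (clamped - min_potential) / (max_potential - min_potential)
    return int(round(scale * 255))


# Values outside the limits are capped before scaling.
print(potential_to_pixel(-0.2, 0.0, 1.0), potential_to_pixel(1.7, 0.0, 1.0))
```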

Neutral potential

This setting has different effects depending on the decay function:

| Function | Neutral potential function |
|---|---|
| None | No function. |
| Linear | Pixel brightness value decays linearly into the neutral potential value. |
| Exponential | No function. |
| Step | Each pixel value is set to neutral potential after generating a frame. |

Neutral potential can be set using dv::Accumulator::setNeutralPotential().

Ignore polarity

If this value is set, all events act as if they had positive polarity, so Event contribution is always added. This can be used to generate edge images instead of an actual image reconstruction, by setting both Neutral potential and Min potential to zero.

This feature can be enabled using the dv::Accumulator::setIgnorePolarity() method.

Synchronous Decay

If this value is set, decay happens in continuous time for all pixels: in every frame, each pixel is eagerly decayed up to the time the image is generated. If this value is not set, decay is evaluated lazily, and an individual pixel is only decayed when it receives an event.

Note

Both decay regimes yield the same overall decay over time, just the time at which it is applied changes. This parameter does not have an effect for Step decay. Step decay is always synchronous at generation time.

Synchronous decay can be enabled using the dv::Accumulator::setSynchronousDecay() method.
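The equivalence of the two decay regimes can be checked numerically for an exponential decay: decaying a pixel once over a whole interval (lazily, when the next event arrives) gives the same result as decaying it at every frame along the way (synchronously). The sketch below is plain Python. The decay formula and the names `exp_decay`, `tau` and `neutral` are assumptions for illustration and are not taken from the library.

```python
import math


def exp_decay(potential, elapsed, tau, neutral=0.0):
    """Exponential relaxation of a potential toward `neutral` (assumed form)."""
    return neutral + (potential - neutral) * math.exp(-elapsed / tau)


def decay_lazily(potential, total_time, tau):
    # One decay step covering the whole interval, applied when an event arrives.
    return exp_decay(potential, total_time, tau)


def decay_synchronously(potential, total_time, tau, frame_interval):
    # Decay step by step at every frame generation until the interval is covered.
    elapsed = 0.0
    while elapsed < total_time:
        step = min(frame_interval, total_time - elapsed)
        potential = exp_decay(potential, step, tau)
        elapsed += step
    return potential


lazy = decay_lazily(1.0, 1e6, 1e6)
eager = decay_synchronously(1.0, 1e6, 1e6, frame_interval=33000.0)
print(math.isclose(lazy, eager, rel_tol=1e-9))
```

The results agree up to floating point error because consecutive exponential factors multiply into a single factor over the total elapsed time.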

Accumulating events from a camera

The following sample code shows how to use dv::Accumulator together with dv::EventStreamSlicer and dv::io::CameraCapture to implement a pipeline that generates a continuous stream of accumulated frames:

```cpp
#include <dv-processing/core/frame.hpp>
#include <dv-processing/io/camera_capture.hpp>

#include <opencv2/highgui.hpp>

int main() {
    using namespace std::chrono_literals;

    // Open any camera
    dv::io::CameraCapture capture;

    // Make sure it supports event stream output, throw an error otherwise
    if (!capture.isEventStreamAvailable()) {
        throw dv::exceptions::RuntimeError("Input camera does not provide an event stream.");
    }

    // Initialize an accumulator with some resolution
    dv::Accumulator accumulator(*capture.getEventResolution());

    // Apply configuration, these values can be modified to taste
    accumulator.setMinPotential(0.f);
    accumulator.setMaxPotential(1.f);
    accumulator.setNeutralPotential(0.5f);
    accumulator.setEventContribution(0.15f);
    accumulator.setDecayFunction(dv::Accumulator::Decay::EXPONENTIAL);
    accumulator.setDecayParam(1e+6);
    accumulator.setIgnorePolarity(false);
    accumulator.setSynchronousDecay(false);

    // Initialize a preview window
    cv::namedWindow("Preview", cv::WINDOW_NORMAL);

    // Initialize a slicer
    dv::EventStreamSlicer slicer;

    // Register a callback every 33 milliseconds
    slicer.doEveryTimeInterval(33ms, [&accumulator](const dv::EventStore &events) {
        // Pass events into the accumulator and generate a preview frame
        accumulator.accept(events);
        dv::Frame frame = accumulator.generateFrame();

        // Show the accumulated image
        cv::imshow("Preview", frame.image);
        cv::waitKey(2);
    });

    // Run the event processing while the camera is connected
    while (capture.isRunning()) {
        // Receive events, check if anything was received
        if (const auto events = capture.getNextEventBatch()) {
            // If so, pass the events into the slicer to handle them
            slicer.accept(*events);
        }
    }

    return 0;
}
```

```python
import dv_processing as dv
import cv2 as cv
from datetime import timedelta

# Open any camera
capture = dv.io.CameraCapture()

# Make sure it supports event stream output, throw an error otherwise
if not capture.isEventStreamAvailable():
    raise RuntimeError("Input camera does not provide an event stream.")

# Initialize an accumulator with some resolution
accumulator = dv.Accumulator(capture.getEventResolution())

# Apply configuration, these values can be modified to taste
accumulator.setMinPotential(0.0)
accumulator.setMaxPotential(1.0)
accumulator.setNeutralPotential(0.5)
accumulator.setEventContribution(0.15)
accumulator.setDecayFunction(dv.Accumulator.Decay.EXPONENTIAL)
accumulator.setDecayParam(1e+6)
accumulator.setIgnorePolarity(False)
accumulator.setSynchronousDecay(False)

# Initialize preview window
cv.namedWindow("Preview", cv.WINDOW_NORMAL)

# Initialize a slicer
slicer = dv.EventStreamSlicer()


# Declare the callback method for slicer
def slicing_callback(events: dv.EventStore):
    # Pass events into the accumulator and generate a preview frame
    accumulator.accept(events)
    frame = accumulator.generateFrame()

    # Show the accumulated image
    cv.imshow("Preview", frame.image)
    cv.waitKey(2)


# Register a callback every 33 milliseconds
slicer.doEveryTimeInterval(timedelta(milliseconds=33), slicing_callback)

# Run the event processing while the camera is connected
while capture.isRunning():
    # Receive events
    events = capture.getNextEventBatch()

    # Check if anything was received
    if events is not None:
        # If so, pass the events into the slicer to handle them
        slicer.accept(events)
```

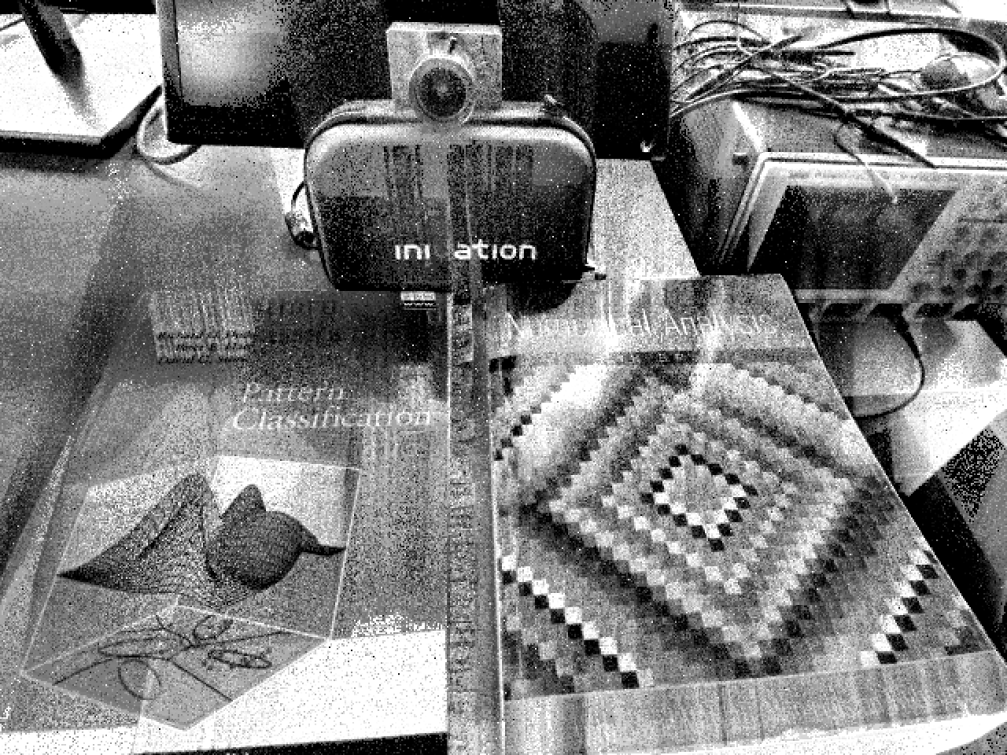

Frame generated using dv::Accumulator class.

Edge accumulation

The dv-processing library provides a highly optimized variant of the accumulator for generating edge maps, dv::EdgeMapAccumulator. It was specifically optimized for speed of execution, so it has only a minimal set of settings and supported features compared to dv::Accumulator.

Below is a table providing available parameters for the dv::EdgeMapAccumulator:

| Parameter | Default value | Accepted values | Comment |
|---|---|---|---|
| Contribution | 0.25 | [0.0; 1.0] | Contribution potential for a single event. |
| Ignore polarity | true | boolean | All events are considered positive if enabled. |
| Neutral potential | 0.0 | [0.0; 1.0] | Neutral potential is the default pixel value when decay is disabled and the value that pixels decay into when decay is enabled. |
| Decay param | 1.0 | [0.0; 1.0] | This value defines how fast pixel values decay to the neutral value. The bigger the value, the faster the pixel value will reach the neutral value. Decay is applied before each frame generation. The range for the decay value is [0.0; 1.0], where 0.0 will not apply any decay and 1.0 will apply the maximum decay value, resetting a pixel to neutral potential at each generation (default behavior). |
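One way to picture the Decay param is as the largest step a pixel may take toward the neutral potential before each frame is generated. The plain-Python sketch below follows that reading. The function `decay_edge_pixel` and the step-based model are assumptions chosen to match the two documented end points (0.0 applies no decay, 1.0 resets to neutral potential); the library's actual computation may differ.

```python
def decay_edge_pixel(value, neutral, decay):
    """Move a pixel value in [0, 1] toward `neutral` before a frame is generated.

    `decay` in [0, 1] is treated as the largest step a pixel may take toward
    the neutral value (an assumed model, not the library's implementation).
    """
    if not 0.0 <= decay <= 1.0:
        raise ValueError("decay must be within [0.0, 1.0]")
    if value > neutral:
        return max(neutral, value - decay)
    return min(neutral, value + decay)


# 0.0 keeps the pixel, 1.0 resets it, values in between decay it partially.
for decay in (0.0, 0.25, 1.0):
    print(decay, decay_edge_pixel(1.0, 0.0, decay))
```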

The following sample shows the use of dv::EdgeMapAccumulator with dv::EventStreamSlicer and dv::io::CameraCapture to generate a stream of edge images:

```cpp
#include <dv-processing/core/frame.hpp>
#include <dv-processing/io/camera_capture.hpp>

#include <opencv2/highgui.hpp>

int main() {
    using namespace std::chrono_literals;

    // Open any camera
    dv::io::CameraCapture capture;

    // Make sure it supports event stream output, throw an error otherwise
    if (!capture.isEventStreamAvailable()) {
        throw dv::exceptions::RuntimeError("Input camera does not provide an event stream.");
    }

    // Initialize an accumulator with some resolution
    dv::EdgeMapAccumulator accumulator(*capture.getEventResolution());

    // Apply configuration, these values can be modified to taste
    accumulator.setNeutralPotential(0.0f);
    accumulator.setEventContribution(0.25f);
    accumulator.setDecay(1.0);
    accumulator.setIgnorePolarity(true);

    // Initialize a preview window
    cv::namedWindow("Preview", cv::WINDOW_NORMAL);

    // Initialize a slicer
    dv::EventStreamSlicer slicer;

    // Register a callback every 33 milliseconds
    slicer.doEveryTimeInterval(33ms, [&accumulator](const dv::EventStore &events) {
        // Pass events into the accumulator and generate a preview frame
        accumulator.accept(events);
        dv::Frame frame = accumulator.generateFrame();

        // Show the accumulated image
        cv::imshow("Preview", frame.image);
        cv::waitKey(2);
    });

    // Run the event processing while the camera is connected
    while (capture.isRunning()) {
        // Receive events, check if anything was received
        if (const auto events = capture.getNextEventBatch()) {
            // If so, pass the events into the slicer to handle them
            slicer.accept(*events);
        }
    }

    return 0;
}
```

```python
import dv_processing as dv
import cv2 as cv
from datetime import timedelta

# Open any camera
capture = dv.io.CameraCapture()

# Make sure it supports event stream output, throw an error otherwise
if not capture.isEventStreamAvailable():
    raise RuntimeError("Input camera does not provide an event stream.")

# Initialize an accumulator with some resolution
accumulator = dv.EdgeMapAccumulator(capture.getEventResolution())

# Apply configuration, these values can be modified to taste
accumulator.setNeutralPotential(0.0)
accumulator.setEventContribution(0.25)
accumulator.setDecay(1.0)
accumulator.setIgnorePolarity(True)

# Initialize a preview window
cv.namedWindow("Preview", cv.WINDOW_NORMAL)

# Initialize a slicer
slicer = dv.EventStreamSlicer()


# Declare the callback method for slicer
def slicing_callback(events: dv.EventStore):
    # Pass events into the accumulator and generate a preview frame
    accumulator.accept(events)
    frame = accumulator.generateFrame()

    # Show the accumulated image
    cv.imshow("Preview", frame.image)
    cv.waitKey(2)


# Register callback to be performed every 33 milliseconds
slicer.doEveryTimeInterval(timedelta(milliseconds=33), slicing_callback)

# Run the event processing while the camera is connected
while capture.isRunning():
    # Receive events
    events = capture.getNextEventBatch()

    # Check if anything was received
    if events is not None:
        # If so, pass the events into the slicer to handle them
        slicer.accept(events)
```

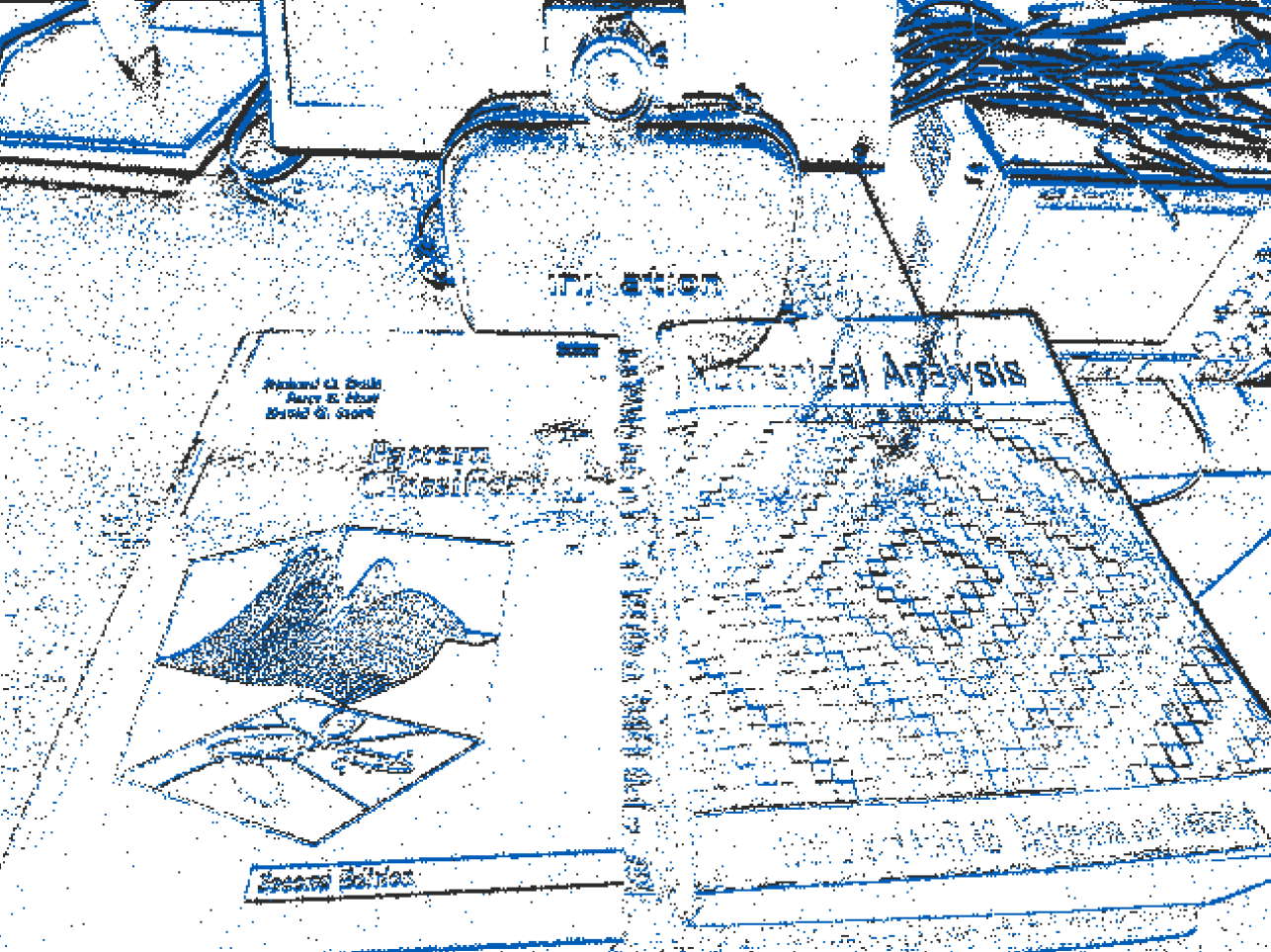

Frame generated using dv::EdgeMapAccumulator class.

Event visualization

Accumulators, described in the previous sections, are useful when an image or edge representation of events is needed, mostly so that events can be processed with typical image processing algorithms.

The dv::visualization::EventVisualizer class serves the purpose of simple event visualization. Instead of increasing or decreasing pixel brightness, dv::visualization::EventVisualizer just color-codes the pixel coordinates where an event was registered.
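The idea of color coding can be sketched in a few lines of plain Python: start from a background-colored image and paint each event's pixel with the color of its polarity. The function `color_code_events`, the tuple-based event layout and the color values are hypothetical and serve only as an illustration; they do not reflect how the library stores events or images.

```python
def color_code_events(events, width, height, background, positive, negative):
    """Return a height x width image as nested lists of colour tuples.

    `events` is an iterable of (x, y, polarity) tuples.
    """
    image = [[background for _ in range(width)] for _ in range(height)]
    for x, y, polarity in events:
        # Later events overwrite earlier ones at the same coordinates.
        image[y][x] = positive if polarity else negative
    return image


WHITE, BLUE, GREY = (255, 255, 255), (183, 93, 0), (43, 43, 43)
preview = color_code_events([(0, 0, True), (2, 1, False)], 3, 2, WHITE, BLUE, GREY)
for row in preview:
    print(row)
```

Unlike an accumulator, this representation keeps no brightness history: a pixel only records whether an event occurred there and the polarity of the most recent one.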

The following sample shows the use of the dv::visualization::EventVisualizer class to generate colored previews of events using dv::EventStreamSlicer and dv::io::CameraCapture:

```cpp
#include <dv-processing/io/camera_capture.hpp>
#include <dv-processing/visualization/event_visualizer.hpp>

#include <opencv2/highgui.hpp>

int main() {
    using namespace std::chrono_literals;

    // Open any camera
    dv::io::CameraCapture capture;

    // Make sure it supports event stream output, throw an error otherwise
    if (!capture.isEventStreamAvailable()) {
        throw dv::exceptions::RuntimeError("Input camera does not provide an event stream.");
    }

    // Initialize a visualizer with the camera sensor resolution
    dv::visualization::EventVisualizer visualizer(*capture.getEventResolution());

    // Apply color scheme configuration, these values can be modified to taste
    visualizer.setBackgroundColor(dv::visualization::colors::white);
    visualizer.setPositiveColor(dv::visualization::colors::iniBlue);
    visualizer.setNegativeColor(dv::visualization::colors::darkGrey);

    // Initialize a preview window
    cv::namedWindow("Preview", cv::WINDOW_NORMAL);

    // Initialize a slicer
    dv::EventStreamSlicer slicer;

    // Register a callback every 33 milliseconds
    slicer.doEveryTimeInterval(33ms, [&visualizer](const dv::EventStore &events) {
        // Generate a preview frame
        cv::Mat image = visualizer.generateImage(events);

        // Show the generated image
        cv::imshow("Preview", image);
        cv::waitKey(2);
    });

    // Run the event processing while the camera is connected
    while (capture.isRunning()) {
        // Receive events, check if anything was received
        if (const auto events = capture.getNextEventBatch()) {
            // If so, pass the events into the slicer to handle them
            slicer.accept(*events);
        }
    }

    return 0;
}
```

```python
import dv_processing as dv
import cv2 as cv
from datetime import timedelta

# Open any camera
capture = dv.io.CameraCapture()

# Make sure it supports event stream output, throw an error otherwise
if not capture.isEventStreamAvailable():
    raise RuntimeError("Input camera does not provide an event stream.")

# Initialize a visualizer with the camera sensor resolution
visualizer = dv.visualization.EventVisualizer(capture.getEventResolution())

# Apply color scheme configuration, these values can be modified to taste
visualizer.setBackgroundColor(dv.visualization.colors.white())
visualizer.setPositiveColor(dv.visualization.colors.iniBlue())
visualizer.setNegativeColor(dv.visualization.colors.darkGrey())

# Initialize a preview window
cv.namedWindow("Preview", cv.WINDOW_NORMAL)

# Initialize a slicer
slicer = dv.EventStreamSlicer()


# Declare the callback method for slicer
def slicing_callback(events: dv.EventStore):
    # Generate a preview frame
    frame = visualizer.generateImage(events)

    # Show the generated image
    cv.imshow("Preview", frame)
    cv.waitKey(2)


# Register callback to be performed every 33 milliseconds
slicer.doEveryTimeInterval(timedelta(milliseconds=33), slicing_callback)

# Run the event processing while the camera is connected
while capture.isRunning():
    # Receive events
    events = capture.getNextEventBatch()

    # Check if anything was received
    if events is not None:
        # If so, pass the events into the slicer to handle them
        slicer.accept(events)
```

Frame generated using dv::visualization::EventVisualizer class.

Time surface

A time surface is another frame-like representation of events. It has the same 2D image structure, but instead of encoding events as pixel brightness, each pixel value holds the timestamp of the latest event at that pixel.

The timestamps can be normalized to obtain an image representation of the time surface, in which the latest timestamps are represented by the brightest pixel values.
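Both steps, keeping the latest timestamp per pixel and normalizing timestamps into an image, can be sketched in plain Python. The helpers `update_time_surface` and `normalize_time_surface`, the tuple-based event layout and the min-max normalization are assumptions for illustration; the library may normalize differently.

```python
def update_time_surface(surface, events):
    """Store the timestamp of the latest event at each pixel, in place.

    `events` is an iterable of (x, y, timestamp) tuples.
    """
    for x, y, timestamp in events:
        surface[y][x] = max(surface[y][x], timestamp)


def normalize_time_surface(surface):
    """Scale timestamps to 0..255 so the latest timestamp is the brightest."""
    flat = [t for row in surface for t in row]
    oldest, latest = min(flat), max(flat)
    span = latest - oldest
    if span == 0:
        # All pixels share one timestamp, so there is no contrast to show.
        return [[0 for _ in row] for row in surface]
    return [[(t - oldest) * 255 // span for t in row] for row in surface]


surface = [[0, 0], [0, 0]]  # 2 x 2 sensor, no events seen yet
update_time_surface(surface, [(0, 0, 100), (1, 0, 200), (0, 0, 300)])
print(normalize_time_surface(surface))
```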

The following sample shows the use of the dv::TimeSurface class to generate time surface previews of events using dv::EventStreamSlicer and dv::io::CameraCapture:

```cpp
#include <dv-processing/core/frame.hpp>
#include <dv-processing/io/camera_capture.hpp>

#include <opencv2/highgui.hpp>

int main() {
    using namespace std::chrono_literals;

    // Open any camera
    dv::io::CameraCapture capture;

    // Make sure it supports event stream output, throw an error otherwise
    if (!capture.isEventStreamAvailable()) {
        throw dv::exceptions::RuntimeError("Input camera does not provide an event stream.");
    }

    // Initialize a time surface with the camera sensor resolution
    dv::TimeSurface surface(*capture.getEventResolution());

    // Initialize a preview window
    cv::namedWindow("Preview", cv::WINDOW_NORMAL);

    // Initialize a slicer
    dv::EventStreamSlicer slicer;

    // Register a callback every 33 milliseconds
    slicer.doEveryTimeInterval(33ms, [&surface](const dv::EventStore &events) {
        // Pass the events to update the time surface
        surface.accept(events);

        // Generate a preview frame
        dv::Frame frame = surface.generateFrame();

        // Show the generated image
        cv::imshow("Preview", frame.image);
        cv::waitKey(2);
    });

    // Run the event processing while the camera is connected
    while (capture.isRunning()) {
        // Receive events, check if anything was received
        if (const auto events = capture.getNextEventBatch()) {
            // If so, pass the events into the slicer to handle them
            slicer.accept(*events);
        }
    }

    return 0;
}
```

```python
import dv_processing as dv
import cv2 as cv
from datetime import timedelta

# Open any camera
capture = dv.io.CameraCapture()

# Make sure it supports event stream output, throw an error otherwise
if not capture.isEventStreamAvailable():
    raise RuntimeError("Input camera does not provide an event stream.")

# Initialize a time surface with the camera sensor resolution
surface = dv.TimeSurface(capture.getEventResolution())

# Initialize a preview window
cv.namedWindow("Preview", cv.WINDOW_NORMAL)

# Initialize a slicer
slicer = dv.EventStreamSlicer()


# Declare the callback method for slicer
def slicing_callback(events: dv.EventStore):
    # Pass the events to update the time surface
    surface.accept(events)

    # Generate a preview frame
    frame = surface.generateFrame()

    # Show the generated image
    cv.imshow("Preview", frame.image)
    cv.waitKey(2)


# Register callback to be performed every 33 milliseconds
slicer.doEveryTimeInterval(timedelta(milliseconds=33), slicing_callback)

# Run the event processing while the camera is connected
while capture.isRunning():
    # Receive events
    events = capture.getNextEventBatch()

    # Check if anything was received
    if events is not None:
        # If so, pass the events into the slicer to handle them
        slicer.accept(events)
```

Frame generated using dv::TimeSurface class.

Speed invariant time surface

The speed invariant time surface is a specific time surface variant that is more suitable for feature extraction. The implementation follows this paper: https://arxiv.org/pdf/1903.11332.pdf.

The following sample shows the use of the dv::SpeedInvariantTimeSurface class to generate time surface previews of events using dv::EventStreamSlicer and dv::io::CameraCapture:

```cpp
#include <dv-processing/io/camera_capture.hpp>

#include <opencv2/highgui.hpp>

int main() {
    using namespace std::chrono_literals;

    // Open any camera
    dv::io::CameraCapture capture;

    // Make sure it supports event stream output, throw an error otherwise
    if (!capture.isEventStreamAvailable()) {
        throw dv::exceptions::RuntimeError("Input camera does not provide an event stream.");
    }

    // Initialize a speed invariant time surface with the camera sensor resolution
    dv::SpeedInvariantTimeSurface surface(*capture.getEventResolution());

    // Initialize a preview window
    cv::namedWindow("Preview", cv::WINDOW_NORMAL);

    // Initialize a slicer
    dv::EventStreamSlicer slicer;

    // Register a callback every 33 milliseconds
    slicer.doEveryTimeInterval(33ms, [&surface](const dv::EventStore &events) {
        // Pass the events to update the time surface
        surface.accept(events);

        // Generate a preview frame
        dv::Frame frame = surface.generateFrame();

        // Show the generated image
        cv::imshow("Preview", frame.image);
        cv::waitKey(2);
    });

    // Run the event processing while the camera is connected
    while (capture.isRunning()) {
        // Receive events, check if anything was received
        if (const auto events = capture.getNextEventBatch()) {
            // If so, pass the events into the slicer to handle them
            slicer.accept(*events);
        }
    }

    return 0;
}
```

```python
import dv_processing as dv
import cv2 as cv
from datetime import timedelta

# Open any camera
capture = dv.io.CameraCapture()

# Make sure it supports event stream output, throw an error otherwise
if not capture.isEventStreamAvailable():
    raise RuntimeError("Input camera does not provide an event stream.")

# Initialize a speed invariant time surface with the camera sensor resolution
surface = dv.SpeedInvariantTimeSurface(capture.getEventResolution())

# Initialize a preview window
cv.namedWindow("Preview", cv.WINDOW_NORMAL)

# Initialize a slicer
slicer = dv.EventStreamSlicer()


# Declare the callback method for slicer
def slicing_callback(events: dv.EventStore):
    # Pass the events to update the time surface
    surface.accept(events)

    # Generate a preview frame
    frame = surface.generateFrame()

    # Show the generated image
    cv.imshow("Preview", frame.image)
    cv.waitKey(2)


# Register callback to be performed every 33 milliseconds
slicer.doEveryTimeInterval(timedelta(milliseconds=33), slicing_callback)

# Run the event processing while the camera is connected
while capture.isRunning():
    # Receive events
    events = capture.getNextEventBatch()

    # Check if anything was received
    if events is not None:
        # If so, pass the events into the slicer to handle them
        slicer.accept(events)
```

Frame generated using dv::SpeedInvariantTimeSurface class.

Performance of available accumulators

The library benchmarks the available accumulation algorithms to make sure they perform well. Accumulators are benchmarked on two metrics:

Event throughput - measured in millions of (mega) events per second;

Framerate - measured in generated frames per second.

The benchmarks are performed by generating a batch of events at uniformly random pixel coordinates at VGA (640x480) resolution. Below are the results of running the benchmark on an AMD Ryzen 7 3800X 8-core processor:

| Accumulator type | Framerate (FPS) | Throughput (MegaEvent/s) |
|---|---|---|
| Accumulator | 668 | 66.5 |
| EdgeMapAccumulator | 1767 | 149.1 |
| EventVisualizer | 785 | 78.3 |
| TimeSurface | 910 | 91.5 |
| SpeedInvariantTimeSurface | 370 | 36.8 |