Feature detection in events

The dv-processing library provides extensible interfaces and implementations for feature detection on event streams. Features are locations of interest in the data, such as corners, that can be detected repeatably. Detected features can be tracked over time in the event stream, so the library provides a reusable interface that lets any feature detection algorithm implementation be combined with a tracking algorithm.

Feature detectors

This section describes available feature detectors and their basic usage.

Feature detector using OpenCV

The most basic use of a feature detector is to apply an OpenCV feature detector implementation to a dv::Frame through the dv-processing feature detector wrapper. The feature detection wrapper base class dv::features::FeatureDetector is designed to wrap a feature detection algorithm and add a few processing steps: handling of image margins (ignoring features that are very close to the edge of the image) and subsampling as a post-detection step (sampling only an optimal set of features).

Margins are set by a floating-point coefficient that expresses the margin size relative to the resolution of the input image; e.g. 0.05 sets margins of 5% of the total width and height of the image.
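
To make the margin coefficient concrete, the following is a minimal pure-Python sketch of how a relative margin could translate into a pixel region and a filter on feature coordinates. The function names `margin_bounds` and `filter_by_margin` are hypothetical, and the exact rounding and boundary handling inside dv::features::FeatureDetector are assumptions here, not taken from the library.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def margin_bounds(width: int, height: int, margin: float) -> Tuple[float, float, float, float]:
    """Return (x_min, y_min, x_max, y_max) of the region left inside the margins.

    Assumption: the same coefficient applies to both axes, relative to
    the image width and height respectively.
    """
    if not 0.0 <= margin < 0.5:
        raise ValueError("margin must be in the range [0.0, 0.5)")
    margin_x = width * margin
    margin_y = height * margin
    return margin_x, margin_y, width - margin_x, height - margin_y


def filter_by_margin(points: List[Point], width: int, height: int, margin: float) -> List[Point]:
    """Drop points that fall inside the margin band along the image border."""
    x_min, y_min, x_max, y_max = margin_bounds(width, height, margin)
    return [(x, y) for (x, y) in points if x_min <= x < x_max and y_min <= y < y_max]


# A 640x480 image with a 0.05 margin ignores a 32-pixel band on the left and
# right and a 24-pixel band on the top and bottom.
points = [(5.0, 100.0), (320.0, 240.0), (639.0, 479.0), (40.0, 30.0)]
print(filter_by_margin(points, 640, 480, 0.05))
# prints [(320.0, 240.0), (40.0, 30.0)]
```

Expressing the margin as a fraction keeps the same setting meaningful across cameras with different resolutions.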

Most applications need only a select number of features. dv::features::FeatureDetector simplifies feature subsampling by providing a post-processing step that subsamples the output features. Subsampling is performed by enabling one of the following post-processing options:

- None: Do not perform any post-processing; all features from detection are returned.
- TopN: Retrieve a given number of the highest scoring features.
- AdaptiveNMS: Apply the AdaptiveNMS algorithm to retrieve features that are equally spaced in pixel space. More information on AdaptiveNMS is available in the original code and paper.
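
The difference between score-based and spatially aware subsampling can be illustrated with a small pure-Python sketch. `top_n` mirrors the TopN idea directly. `spread_out` is only a simplified stand-in that uses greedy suppression with a fixed radius; it is not the AdaptiveNMS algorithm itself, which is described in the referenced paper. The tuple layout `(x, y, score)` and both function names are assumptions made for this example.

```python
from typing import List, Tuple

# (x, y, score); a plain tuple stands in for a keypoint type
Keypoint = Tuple[float, float, float]


def top_n(keypoints: List[Keypoint], n: int) -> List[Keypoint]:
    """Keep the n highest-scoring keypoints, regardless of where they are."""
    return sorted(keypoints, key=lambda kp: kp[2], reverse=True)[:n]


def spread_out(keypoints: List[Keypoint], n: int, min_distance: float) -> List[Keypoint]:
    """Greedy suppression with a fixed radius (simplified illustration).

    Keypoints are visited by descending score and kept only if they are at
    least min_distance away from every keypoint kept so far.
    """
    kept: List[Keypoint] = []
    for x, y, score in sorted(keypoints, key=lambda kp: kp[2], reverse=True):
        if len(kept) == n:
            break
        if all((x - kx) ** 2 + (y - ky) ** 2 >= min_distance ** 2 for kx, ky, _ in kept):
            kept.append((x, y, score))
    return kept


keypoints = [
    (10.0, 10.0, 0.9),
    (11.0, 10.0, 0.8),  # close to the strongest keypoint
    (12.0, 11.0, 0.7),  # also close to the strongest keypoint
    (200.0, 150.0, 0.4),
    (50.0, 300.0, 0.3),
]

print(top_n(keypoints, 3))
# prints [(10.0, 10.0, 0.9), (11.0, 10.0, 0.8), (12.0, 11.0, 0.7)]
print(spread_out(keypoints, 3, min_distance=20.0))
# prints [(10.0, 10.0, 0.9), (200.0, 150.0, 0.4), (50.0, 300.0, 0.3)]
```

In this toy input, selecting purely by score returns three features from the same small cluster, while the spatially aware variant trades some score for coverage of the image.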

The following code sample shows how to detect the “good features to track” from OpenCV on an accumulated image from a live camera:

C++:

    #include <dv-processing/core/frame.hpp>
    #include <dv-processing/features/feature_detector.hpp>
    #include <dv-processing/io/camera_capture.hpp>

    #include <opencv2/features2d.hpp>
    #include <opencv2/highgui.hpp>
    #include <opencv2/imgproc.hpp>

    #include <chrono>

    int main() {
        using namespace std::chrono_literals;

        // Open any camera
        dv::io::CameraCapture capture;

        // Make sure it supports event stream output, throw an error otherwise
        if (!capture.isEventStreamAvailable()) {
            throw dv::exceptions::RuntimeError("Input camera does not provide an event stream.");
        }

        // Initialize an accumulator with camera resolution
        dv::Accumulator accumulator(*capture.getEventResolution());

        // Initialize a preview window
        cv::namedWindow("Preview", cv::WINDOW_NORMAL);

        // Let's detect 100 features
        const size_t numberOfFeatures = 100;

        // Create an image feature detector with given resolution. By default, it uses FAST feature detector
        // with AdaptiveNMS post-processing; here the OpenCV GFTT ("good features to track") detector is
        // passed in instead.
        auto detector = dv::features::ImageFeatureDetector(*capture.getEventResolution(), cv::GFTTDetector::create());

        // Initialize a slicer
        dv::EventStreamSlicer slicer;

        // Register a callback every 33 milliseconds
        slicer.doEveryTimeInterval(33ms, [&accumulator, &detector](const dv::EventStore &events) {
            // Pass events into the accumulator and generate a preview frame
            accumulator.accept(events);
            dv::Frame frame = accumulator.generateFrame();

            // Run the feature detection on the accumulated frame
            const auto features = detector.runDetection(frame, numberOfFeatures);

            // Create a colored preview image by converting from grayscale to BGR
            cv::Mat preview;
            cv::cvtColor(frame.image, preview, cv::COLOR_GRAY2BGR);

            // Draw detected features
            cv::drawKeypoints(preview, dv::data::fromTimedKeyPoints(features), preview);

            // Show the accumulated image
            cv::imshow("Preview", preview);
            cv::waitKey(2);
        });

        // Run the event processing while the camera is connected
        while (capture.isRunning()) {
            // Receive events, check if anything was received
            if (const auto events = capture.getNextEventBatch()) {
                // If so, pass the events into the slicer to handle them
                slicer.accept(*events);
            }
        }

        return 0;
    }

Python:

    import dv_processing as dv
    import cv2 as cv
    from datetime import timedelta

    # Open any camera
    capture = dv.io.CameraCapture()

    # Make sure it supports event stream output, throw an error otherwise
    if not capture.isEventStreamAvailable():
        raise RuntimeError("Input camera does not provide an event stream.")

    # Initialize an accumulator with camera resolution
    accumulator = dv.Accumulator(capture.getEventResolution())

    # Initialize preview window
    cv.namedWindow("Preview", cv.WINDOW_NORMAL)

    # Let's detect 100 features
    number_of_features = 100

    # Create an image feature detector with given resolution. No detector is passed here, so the
    # default is used: FAST feature detector with AdaptiveNMS post-processing.
    detector = dv.features.ImageFeatureDetector(capture.getEventResolution())

    # Initialize a slicer
    slicer = dv.EventStreamSlicer()


    # Declare the callback method for slicer
    def slicing_callback(events: dv.EventStore):
        # Pass events into the accumulator and generate a preview frame
        accumulator.accept(events)
        frame = accumulator.generateFrame()

        # Run the feature detection on the accumulated frame
        features = detector.runDetection(frame, number_of_features)

        # Create a colored preview image by converting from grayscale to BGR
        preview = cv.cvtColor(frame.image, cv.COLOR_GRAY2BGR)
        for feature in features:
            # Draw a square marker on each feature location
            cv.drawMarker(preview, (int(feature.pt[0]), int(feature.pt[1])),
                          dv.visualization.colors.someNeonColor(feature.class_id), cv.MARKER_SQUARE, 10, 2)

        # Show the accumulated image
        cv.imshow("Preview", preview)
        cv.waitKey(2)


    # Register a callback every 33 milliseconds
    slicer.doEveryTimeInterval(timedelta(milliseconds=33), slicing_callback)

    # Run the event processing while the camera is connected
    while capture.isRunning():
        # Receive events
        events = capture.getNextEventBatch()

        # Check if anything was received
        if events is not None:
            # If so, pass the events into the slicer to handle them
            slicer.accept(events)

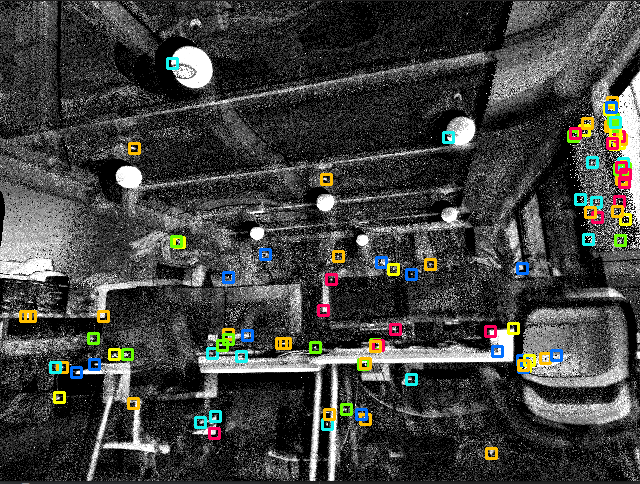

Detected “good features to track” on an accumulated image.

Arc* feature detector

The library provides an implementation of the Arc* feature detector. Arc* performs corner detection on a per-event basis, so it takes a dv::EventStore as input. More details on this feature detection algorithm can be found in the original paper publication.

C++:

    #include <dv-processing/core/frame.hpp>
    #include <dv-processing/features/arc_corner_detector.hpp>
    #include <dv-processing/features/feature_detector.hpp>
    #include <dv-processing/io/camera_capture.hpp>
    #include <dv-processing/visualization/colors.hpp>

    #include <opencv2/features2d.hpp>
    #include <opencv2/highgui.hpp>
    #include <opencv2/imgproc.hpp>

    #include <chrono>
    #include <memory>

    int main() {
        using namespace std::chrono_literals;

        // Open any camera
        dv::io::CameraCapture capture;

        // Make sure it supports event stream output, throw an error otherwise
        if (!capture.isEventStreamAvailable()) {
            throw dv::exceptions::RuntimeError("Input camera does not provide an event stream.");
        }

        const auto resolution = *capture.getEventResolution();

        // Initialize an accumulator with camera resolution
        dv::Accumulator accumulator(resolution);

        // Initialize a preview window
        cv::namedWindow("Preview", cv::WINDOW_NORMAL);

        // Let's detect 100 features
        const size_t numberOfFeatures = 100;

        // Create an Arc* feature detector with given resolution. Corner range is set to 5 milliseconds
        // (the value 5000 is given in microseconds).
        auto detector = dv::features::FeatureDetector<dv::EventStore, dv::features::ArcCornerDetector<>>(
            resolution, std::make_shared<dv::features::ArcCornerDetector<>>(resolution, 5000, false));

        // Initialize a slicer
        dv::EventStreamSlicer slicer;

        // Register a callback every 33 milliseconds
        slicer.doEveryTimeInterval(33ms, [&accumulator, &detector](const dv::EventStore &events) {
            // Run the feature detection on the incoming events. Features are extracted on a per-event basis
            const auto features = detector.runDetection(events, numberOfFeatures);

            // Run accumulator for an image, it is going to be used only for preview
            accumulator.accept(events);
            const dv::Frame frame = accumulator.generateFrame();

            // Create a colored preview image by converting from grayscale to BGR
            cv::Mat preview;
            cv::cvtColor(frame.image, preview, cv::COLOR_GRAY2BGR);

            // Draw detected features
            cv::drawKeypoints(preview, dv::data::fromTimedKeyPoints(features), preview);

            // Show the accumulated image
            cv::imshow("Preview", preview);
            cv::waitKey(2);
        });

        // Run the event processing while the camera is connected
        while (capture.isRunning()) {
            // Receive events, check if anything was received
            if (const auto events = capture.getNextEventBatch()) {
                // If so, pass the events into the slicer to handle them
                slicer.accept(*events);
            }
        }

        return 0;
    }

Python:

    import dv_processing as dv
    import cv2 as cv
    from datetime import timedelta

    # Open any camera
    capture = dv.io.CameraCapture()

    # Make sure it supports event stream output, throw an error otherwise
    if not capture.isEventStreamAvailable():
        raise RuntimeError("Input camera does not provide an event stream.")

    # Initialize an accumulator with camera resolution
    accumulator = dv.Accumulator(capture.getEventResolution())

    # Initialize preview window
    cv.namedWindow("Preview", cv.WINDOW_NORMAL)

    # Let's detect 100 features
    number_of_features = 100

    # Create an Arc* feature detector with given resolution. Corner range is set to 5 milliseconds
    # (the value 5000 is given in microseconds).
    detector = dv.features.ArcEventFeatureDetector(capture.getEventResolution(), 5000, False)

    # Initialize a slicer
    slicer = dv.EventStreamSlicer()


    # Declare the callback method for slicer
    def slicing_callback(events: dv.EventStore):
        # Run the feature detection on the incoming events
        features = detector.runDetection(events, number_of_features)

        # Pass events into the accumulator and generate a preview frame
        accumulator.accept(events)
        frame = accumulator.generateFrame()

        # Create a colored preview image by converting from grayscale to BGR
        preview = cv.cvtColor(frame.image, cv.COLOR_GRAY2BGR)
        for feature in features:
            # Draw a square marker on each feature location
            cv.drawMarker(preview, (int(feature.pt[0]), int(feature.pt[1])),
                          dv.visualization.colors.someNeonColor(feature.class_id), cv.MARKER_SQUARE, 10, 2)

        # Show the accumulated image
        cv.imshow("Preview", preview)
        cv.waitKey(2)


    # Register a callback every 33 milliseconds
    slicer.doEveryTimeInterval(timedelta(milliseconds=33), slicing_callback)

    # Run the event processing while the camera is connected
    while capture.isRunning():
        # Receive events
        events = capture.getNextEventBatch()

        # Check if anything was received
        if events is not None:
            # If so, pass the events into the slicer to handle them
            slicer.accept(events)

Note

The provided implementation is not suitable for real-time operation at high event rates.

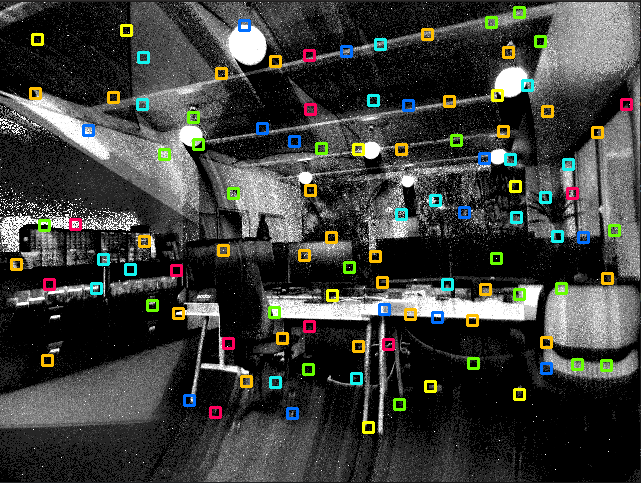

Detected Arc* features displayed on an accumulated image.

Event-based blob detector

The library provides an implementation of a simple event-based blob detector. The class uses cv::SimpleBlobDetector to detect blobs on an image generated with dv::EdgeMapAccumulator. A default cv::SimpleBlobDetector is provided, with reasonable values chosen to detect blobs rather than noise in the accumulated image. It is also possible to specify the detector used to find the blobs and the regions of interest in the image plane where blobs are searched for. In addition, the down-sampling factor applied to the image before detection and an additional pre-processing step to run before the detection step can be specified. In summary, the detection steps are as follows:

1. Compute accumulated image from events
2. Apply ROI and mask to the accumulated event image
3. Down sample image (optional)
4. Apply pre-process function (optional)
5. Detect blobs
6. Rescale blobs to original resolution (if down sampling was performed)
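
The down-sampling and rescaling steps can be sketched in plain Python. This is an illustration under stated assumptions, not the library's implementation: the function names are hypothetical, the blob tuple `(x, y, diameter)` stands in for a keypoint, the image size after each level follows the default output size of OpenCV's pyrDown, `((w + 1) // 2, (h + 1) // 2)`, and scaling the blob diameter together with the coordinates is an assumption.

```python
from typing import List, Tuple

Blob = Tuple[float, float, float]  # (x, y, diameter)


def downsample_factor(pyramid_level: int) -> int:
    """Each pyramid level halves the image, so level k scales by 2**k."""
    if pyramid_level < 0:
        raise ValueError("pyramid_level must be non-negative")
    return 2 ** pyramid_level


def downsampled_size(resolution: Tuple[int, int], pyramid_level: int) -> Tuple[int, int]:
    """Image size after repeated halving, rounding up as cv::pyrDown does by default."""
    width, height = resolution
    for _ in range(pyramid_level):
        width, height = (width + 1) // 2, (height + 1) // 2
    return width, height


def rescale_blobs(blobs: List[Blob], pyramid_level: int) -> List[Blob]:
    """Map blobs found on the down-sampled image back to the original resolution."""
    factor = downsample_factor(pyramid_level)
    return [(x * factor, y * factor, diameter * factor) for x, y, diameter in blobs]


print(downsampled_size((640, 480), 2))
# prints (160, 120)
print(rescale_blobs([(40.0, 30.0, 5.0)], 2))
# prints [(160.0, 120.0, 20.0)]
```

Detecting on a smaller image reduces the work per detection at the cost of spatial precision, since each detected position is only known up to the down-sampling factor.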

C++:

    #include <dv-processing/core/frame.hpp>
    #include <dv-processing/features/event_blob_detector.hpp>
    #include <dv-processing/features/feature_detector.hpp>
    #include <dv-processing/io/camera_capture.hpp>
    #include <dv-processing/visualization/colors.hpp>

    #include <opencv2/features2d.hpp>
    #include <opencv2/highgui.hpp>
    #include <opencv2/imgproc.hpp>

    #include <chrono>
    #include <functional>
    #include <memory>

    int main() {
        using namespace std::chrono_literals;

        // Open any camera
        dv::io::CameraCapture capture;

        // Make sure it supports event stream output, throw an error otherwise
        if (!capture.isEventStreamAvailable()) {
            throw dv::exceptions::RuntimeError("Input camera does not provide an event stream.");
        }

        const auto resolution = *capture.getEventResolution();

        // Initialize an accumulator with camera resolution (for visualization only)
        dv::Accumulator accumulator(resolution);

        // Initialize a preview window
        cv::namedWindow("Preview", cv::WINDOW_NORMAL);

        // Let's detect up to 100 features
        const size_t numberOfFeatures = 100;

        // Define the pre-processing step (here we do nothing but show how it can be implemented)
        std::function<void(cv::Mat &)> preprocess = [](cv::Mat &image) {
            // Modify the image in place here, if needed
        };

        // Define number of pyr-down to be applied to the image before detecting blobs. If pyramidLevel is set to zero,
        // the blobs will be detected on the original image resolution, if pyramid level is one, the image will be down
        // sampled by a factor of 2 (factor of 4 if pyramid level is 2 and so on...) before performing the detection.
        // The blobs will then be scaled back to the original resolution size.
        const int32_t pyramidLevel = 0;

        // Create an event-based blob detector
        const auto blobDetector = std::make_shared<dv::features::EventBlobDetector>(resolution, pyramidLevel, preprocess);
        auto detector = dv::features::EventFeatureBlobDetector(resolution, blobDetector);

        // Initialize a slicer
        dv::EventStreamSlicer slicer;

        // Register a callback every 33 milliseconds
        slicer.doEveryTimeInterval(33ms, [&accumulator, &detector](const dv::EventStore &events) {
            // Run the feature detection on the incoming events.
            const auto features = detector.runDetection(events, numberOfFeatures);

            // Run accumulator for an image, it is going to be used only for preview
            accumulator.accept(events);
            const dv::Frame frame = accumulator.generateFrame();

            // Create a colored preview image by converting from grayscale to BGR
            cv::Mat preview;
            cv::cvtColor(frame.image, preview, cv::COLOR_GRAY2BGR);

            // Draw detected features
            cv::drawKeypoints(preview, dv::data::fromTimedKeyPoints(features), preview);

            // Show the accumulated image
            cv::imshow("Preview", preview);
            cv::waitKey(2);
        });

        // Run the event processing while the camera is connected
        while (capture.isRunning()) {
            // Receive events, check if anything was received
            if (const auto events = capture.getNextEventBatch()) {
                // If so, pass the events into the slicer to handle them
                slicer.accept(*events);
            }
        }

        return 0;
    }

Python:

    import dv_processing as dv
    import cv2 as cv
    from datetime import timedelta


    def preprocess(image):
        # Add your pre-processing here
        return image


    # Open any camera
    capture = dv.io.CameraCapture()

    # Make sure it supports event stream output, throw an error otherwise
    if not capture.isEventStreamAvailable():
        raise RuntimeError("Input camera does not provide an event stream.")

    # Initialize an accumulator with camera resolution (for visualization only)
    accumulator = dv.Accumulator(capture.getEventResolution())

    # Initialize preview window
    cv.namedWindow("Preview", cv.WINDOW_NORMAL)

    # Let's detect up to 100 features
    number_of_features = 100

    # Define number of pyr-down to be applied to the image before detecting blobs. If pyramidLevel is set to zero, the
    # blobs will be detected on the original image resolution, if pyramid level is one, the image will be down sampled by a
    # factor of 2 (factor of 4 if pyramid level is 2 and so on...) before performing the detection. The blobs will then be
    # scaled back to the original resolution size.
    pyramidLevel = 0

    # Create an event-based blob detector
    detector = dv.features.EventFeatureBlobDetector(capture.getEventResolution(), pyramidLevel, preprocess)

    # Initialize a slicer
    slicer = dv.EventStreamSlicer()


    # Declare the callback method for slicer
    def slicing_callback(events: dv.EventStore):
        # Run the feature detection on the incoming events
        features = detector.runDetection(events, number_of_features)

        # Pass events into the accumulator and generate a preview frame
        accumulator.accept(events)
        frame = accumulator.generateFrame()

        # Create a colored preview image by converting from grayscale to BGR
        preview = cv.cvtColor(frame.image, cv.COLOR_GRAY2BGR)
        for feature in features:
            # Draw a square marker on each feature location
            cv.drawMarker(preview, (int(feature.pt[0]), int(feature.pt[1])),
                          dv.visualization.colors.someNeonColor(feature.class_id), cv.MARKER_SQUARE, 10, 2)

        # Show the accumulated image
        cv.imshow("Preview", preview)
        cv.waitKey(2)


    # Register a callback every 33 milliseconds
    slicer.doEveryTimeInterval(timedelta(milliseconds=33), slicing_callback)

    # Run the event processing while the camera is connected
    while capture.isRunning():
        # Receive events
        events = capture.getNextEventBatch()

        # Check if anything was received
        if events is not None:
            # If so, pass the events into the slicer to handle them
            slicer.accept(events)