Welcome to dv-processing’s documentation!

Generic processing algorithms for event cameras.

Introduction

This documentation describes the API and the algorithms available in the dv-processing library and covers the basic usage of the library for event camera data processing. The library is built on the C++20 standard and provides state-of-the-art algorithms that process event data streams from iniVation cameras with high efficiency. The library also provides Python bindings that allow users to develop high-performance event processing applications in Python. Since the library is built on modern C++, it makes extensive use of template metaprogramming to provide extensible and performant implementations of event processing algorithms. The Python bindings have some limitations due to the use of templates, but most applications can be developed using Python alone. Extensive code samples in both C++ and Python are provided alongside the algorithm descriptions; the samples also follow modern coding style conventions. More information about the coding style conventions can be found here. Code samples apply the constant and immutability rules from the previously mentioned document.

Getting started

This section covers installation and usage of the library on Linux, Windows, and macOS. Usage in CMake projects is covered for the C++ API, and installation via pip is the recommended approach for Python projects.

Basics

This section covers the basic features of the library:

Storing events in memory and efficiently accessing them;

Frame image accumulation algorithms from events;

Noise filtering in events and efficient subsampling;

Input/output of event data: live camera access, as well as reading and writing data files.

Frame generated using the dv::EdgeMapAccumulator class.
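The first item in the Basics list is storing events in memory and accessing them efficiently. As a conceptual sketch only, assuming events are kept in timestamp order, the plain Python below (standard library only) shows why ordered storage makes time-window queries cheap: the window bounds are found by binary search instead of a linear scan. `Event`, `SimpleEventStore`, `add`, and `events_between` are hypothetical names chosen for illustration; they are not the dv-processing API.

```python
from bisect import bisect_left
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Event:
    """Hypothetical event record: timestamp (microseconds), pixel position, polarity."""

    timestamp: int
    x: int
    y: int
    polarity: bool


class SimpleEventStore:
    """Hypothetical container keeping events sorted by timestamp (not the dv-processing API)."""

    def __init__(self) -> None:
        self._events: List[Event] = []
        self._timestamps: List[int] = []  # parallel sorted list used for binary search

    def add(self, event: Event) -> None:
        # Require non-decreasing timestamps so the timestamp list stays sorted
        if self._timestamps and event.timestamp < self._timestamps[-1]:
            raise ValueError("events must be added in non-decreasing timestamp order")
        self._events.append(event)
        self._timestamps.append(event.timestamp)

    def events_between(self, start: int, end: int) -> List[Event]:
        # Binary search finds the window bounds in O(log n);
        # only the matching events are copied into the result.
        first = bisect_left(self._timestamps, start)
        last = bisect_left(self._timestamps, end)
        return self._events[first:last]

    def __len__(self) -> int:
        return len(self._events)


store = SimpleEventStore()
for t in range(0, 10_000, 1_000):
    store.add(Event(timestamp=t, x=t % 640, y=t % 480, polarity=(t // 1_000) % 2 == 0))

# Events with 2000 <= timestamp < 5000
window = store.events_between(2_000, 5_000)
print([event.timestamp for event in window])  # [2000, 3000, 4000]
```

The half-open interval `[start, end)` lets consecutive windows tile a stream without double-counting events. The library's own event containers have their own names and methods; refer to the API documentation for those.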

Vision algorithms

This chapter describes the available event processing algorithms that serve as building blocks for computer vision with event cameras. The most notable algorithms and features are:

Camera geometry: sensor calibration, pixel projections, and lens undistortion operations;

Feature detection and tracking;

Minimal kinematics routines;

Mean-shift clustering.

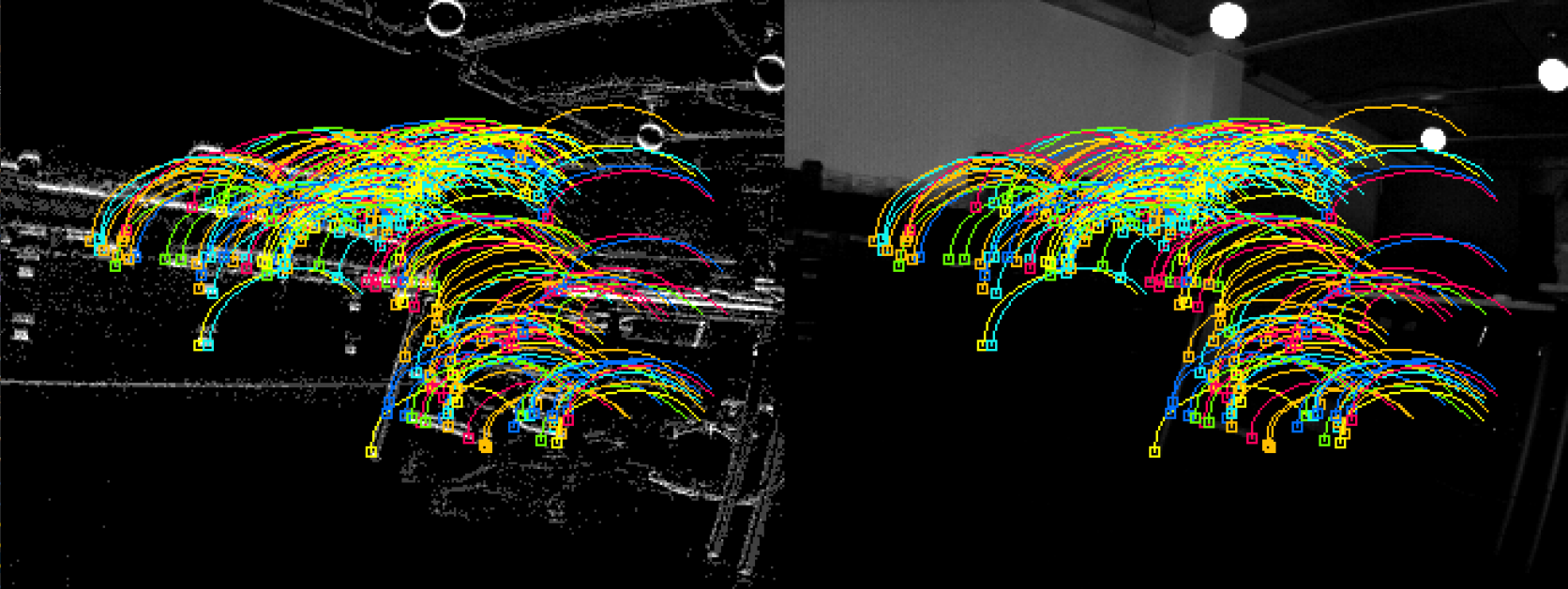

Tracked features on frame and event streams from a camera.

Advanced applications

This section describes how to combine the available library features and algorithms into more complex applications, such as depth estimation, motion compensation, and contrast maximization.

Expected result of semi-dense disparity estimation. The output provides two accumulated frames and a color-coded disparity map.

API

The API documentation provides references for the available classes and methods in the library. It describes each method's use case, function arguments, and produced outputs in detail.

Help

In case of technical issues or other problems, please visit the support page. Any issues in the documentation or the code can be reported to our GitLab issue tracker.