Kinematics primitives

The dv-processing library provides a minimal implementation of kinematic transformation primitives that are useful for geometric computer vision algorithms. These include transforming 3D points with transformation matrices and handling rigid-body motion trajectories over time. The primitives can be used to implement motion compensation algorithms for event data, which reduce or eliminate motion blur in the event stream. The implementation builds on the Eigen library, so the underlying mathematical operations are expected to be efficient.

Transformation

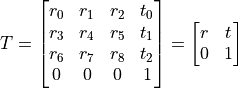

A transformation in dv-processing describes an object’s orientation and position in 3D space at a certain point in time. A transformation contains a timestamp together with a rotational and a translational transformation, expressed as a 4x4 homogeneous transformation matrix T, like this:

$$
T = \begin{bmatrix} R & t \\ 0 & 1 \end{bmatrix} = \begin{bmatrix} r_{11} & r_{12} & r_{13} & t_x \\ r_{21} & r_{22} & r_{23} & t_y \\ r_{31} & r_{32} & r_{33} & t_z \\ 0 & 0 & 0 & 1 \end{bmatrix}
$$

Here:

- R is a rotation matrix (with coefficients r_ij) that describes an object’s rotation.

- t is a translation vector (with components t_x, t_y, t_z) that describes an object’s displacement.
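To make the matrix layout concrete, here is a minimal numpy sketch that assembles a 4x4 homogeneous matrix from a rotation block and a translation column and applies it to a point. It does not use the dv-processing API; the names `R`, `t`, `make_transform` and `transform_point` are chosen here purely for illustration.

```python
import numpy as np


def make_transform(rotation, translation):
    """Assemble a 4x4 homogeneous matrix from a 3x3 rotation and a 3-vector."""
    T = np.eye(4)
    T[:3, :3] = rotation      # upper-left 3x3 block holds the rotation
    T[:3, 3] = translation    # last column holds the translation
    return T


def transform_point(T, point):
    """Apply T to a 3D point by lifting it to homogeneous coordinates."""
    homogeneous = np.append(point, 1.0)
    return (T @ homogeneous)[:3]


# Illustrative values: rotate 90 degrees about the z axis, then shift by 1 along x.
R = np.array([[0.0, -1.0, 0.0],
              [1.0, 0.0, 0.0],
              [0.0, 0.0, 1.0]])
t = np.array([1.0, 0.0, 0.0])
T = make_transform(R, t)

p = np.array([1.0, 0.0, 0.0])
p_transformed = transform_point(T, p)

# The homogeneous product is equivalent to rotating first and then translating.
print(p_transformed, R @ p + t)
```

The bottom row (0, 0, 0, 1) is what lets a single matrix product perform both the rotation and the translation.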

A transformation matrix together with a timestamp describes a complete pose with six degrees of freedom at a given instant. A transformation can be applied to other transformations as well as to 3D points to obtain a new position relative to the applied transformation. The transformation is implemented in the dv::kinematics::Transformation class, which is a templated class. The template parameter sets the scalar data type of the underlying 4x4 matrix, which is either float or double. For convenience, two aliases are predefined, dv::kinematics::Transformationf and dv::kinematics::Transformationd, which differ only in the underlying scalar data type:

- Transformationf uses 32-bit single-precision floating point values.

- Transformationd uses 64-bit double-precision floating point values.

The library generally prefers the single-precision scalar type, since it is accurate enough for sub-millimeter precision and has a lower memory footprint.
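The precision claim can be checked with a rough numpy sketch. It assumes coordinates are expressed in metres (the text does not state the unit) and looks only at the spacing between adjacent representable values, not at error accumulated over chained operations.

```python
import numpy as np

# Gap between adjacent representable values near a given coordinate magnitude.
# Assumption for this illustration: coordinates are in metres.
step32_at_1m = float(np.spacing(np.float32(1.0)))
step32_at_1km = float(np.spacing(np.float32(1000.0)))
step64_at_1km = float(np.spacing(np.float64(1000.0)))

print(f"float32 step near 1 m:    {step32_at_1m:.3e} m")
print(f"float32 step near 1000 m: {step32_at_1km:.3e} m")
print(f"float64 step near 1000 m: {step64_at_1km:.3e} m")

# Storage needed for one 4x4 matrix in each scalar type.
matrix32_bytes = np.zeros((4, 4), dtype=np.float32).nbytes
matrix64_bytes = np.zeros((4, 4), dtype=np.float64).nbytes
print(f"4x4 float32: {matrix32_bytes} bytes, 4x4 float64: {matrix64_bytes} bytes")
```

Under these assumptions, a float32 coordinate about 1 km from the origin still resolves steps of roughly 0.06 mm, while the matrix takes half the memory of its float64 counterpart.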

The following sample code shows how to initialize a transformation and apply it to a 3D point.

```cpp
#include <dv-processing/kinematics/transformation.hpp>

#include <iostream>

int main() {
    Eigen::Matrix4f matrix;

    // Mirror rotation matrix with 0.5 translational offsets on all axes. The rotation matrix should flip
    // x and z axes of the input.
    matrix << -1.f, 0.f, 0.f, 0.5f, 0.f, 1.f, 0.f, 0.5f, 0.f, 0.f, -1.f, 0.5f, 0.f, 0.f, 0.f, 1.f;

    // Initialize the transformation with the above matrix. The timestamp can be ignored for this sample, so it is
    // set to zero.
    const dv::kinematics::Transformationf transformation(0, matrix);

    // Let's take a sample point with offsets of 1 on all axes.
    const Eigen::Vector3f point(1.f, 1.f, 1.f);

    // Apply this transformation to the above point. This should invert x and z axes and add 0.5 to all values.
    const Eigen::Vector3f transformed = transformation.transformPoint(point);

    // Print the resulting output.
    std::cout << "Transformed from [" << point.transpose() << "] to [" << transformed.transpose() << "]" << std::endl;

    return 0;
}
```

```python
import dv_processing as dv
import numpy as np

# Mirror rotation matrix with 0.5 translational offsets on all axes. The rotation matrix should flip
# x and z axes of the input.
matrix = np.array([[-1.0, 0.0, 0.0, 0.5], [0.0, 1.0, 0.0, 0.5], [0.0, 0.0, -1.0, 0.5], [0.0, 0.0, 0.0, 1.]])

# Initialize the transformation with the above matrix. The timestamp can be ignored for this sample, so it is
# set to zero.
transformation = dv.kinematics.Transformationf(0, matrix)

# Let's take a sample point with offsets of 1 on all axes.
point = np.array([1.0, 1.0, 1.0])

# Apply this transformation to the above point. This should invert x and z axes and add 0.5 to all values.
transformed = transformation.transformPoint(point)

# Print the resulting output.
print(f"Transformed from {point} to {transformed}")
```
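The text above notes that a transformation can be applied to other transformations as well as to points. As a rough illustration of what that means at the matrix level, the numpy-only sketch below composes two homogeneous matrices and inverts one of them. It is not the dv-processing API; `invert_transform`, `T_a` and `T_b` are hypothetical names, and `T_a` reuses the matrix values from the sample above.

```python
import numpy as np


def invert_transform(T):
    """Invert a rigid-body transform using R^T and -R^T t instead of a general inverse."""
    R = T[:3, :3]
    t = T[:3, 3]
    inverse = np.eye(4)
    inverse[:3, :3] = R.T
    inverse[:3, 3] = -R.T @ t
    return inverse


# Same values as the sample above: x and z flipped, 0.5 offset on all axes.
T_a = np.array([[-1.0, 0.0, 0.0, 0.5],
                [0.0, 1.0, 0.0, 0.5],
                [0.0, 0.0, -1.0, 0.5],
                [0.0, 0.0, 0.0, 1.0]])

# A pure translation of 1 along x.
T_b = np.eye(4)
T_b[:3, 3] = [1.0, 0.0, 0.0]

# Composition by matrix product: T_ab applies T_b first, then T_a.
T_ab = T_a @ T_b

point = np.array([1.0, 1.0, 1.0, 1.0])  # homogeneous coordinates
composed = (T_ab @ point)[:3]
stepwise = (T_a @ (T_b @ point))[:3]

# Applying the inverse after T_a recovers the original point.
round_trip = (invert_transform(T_a) @ (T_a @ point))[:3]

print(composed, stepwise, round_trip)
```

Note that the order of the product matters: `T_a @ T_b` and `T_b @ T_a` generally describe different motions.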

Linear transformer

A set of transformations with monotonically increasing timestamps forms a motion trajectory. A linear transformer stores such a set of transformations, representing a single object’s trajectory, and extracts transformations at specified points in time; these are calculated by linear interpolation between the nearest available transformations.

The following sample code shows how to use the dv::kinematics::LinearTransformerf class to interpolate intermediate transformations in time:

```cpp
#include <dv-processing/kinematics/linear_transformer.hpp>
#include <dv-processing/kinematics/transformation.hpp>

#include <iostream>

int main() {
    // Declare linear transformer with capacity of 100 transformations. Internally it uses a bounded FIFO queue
    // to manage the transformations.
    dv::kinematics::LinearTransformerf transformer(100);

    // Push first transformation which is an identity matrix, so it starts with no rotation at zero coordinates.
    transformer.pushTransformation(
        dv::kinematics::Transformationf(1000000, Eigen::Vector3f(0.f, 0.f, 0.f), Eigen::Quaternionf::Identity()));

    // Add a second transformation with no rotation as well, but with different translational coordinates.
    transformer.pushTransformation(
        dv::kinematics::Transformationf(2000000, Eigen::Vector3f(1.f, 2.f, 3.f), Eigen::Quaternionf::Identity()));

    // Interpolate transformation at the midpoint (time-wise); this should divide the translational coordinates
    // of the second transformation by a factor of 2.0.
    const auto midpoint = transformer.getTransformAt(1500000);

    // Print the resulting output.
    std::cout << "Interpolated position at [" << midpoint->getTimestamp() << "]: ["
              << midpoint->getTranslation().transpose() << "]" << std::endl;

    return 0;
}
```

```python
import dv_processing as dv
import numpy as np

# Declare linear transformer with capacity of 100 transformations. Internally it uses a bounded FIFO queue
# to manage the transformations.
transformer = dv.kinematics.LinearTransformerf(100)

# Push first transformation which is an identity matrix, so it starts with no rotation at zero coordinates.
transformer.pushTransformation(dv.kinematics.Transformationf(1000000, np.array([0.0, 0.0, 0.0]), (1.0, 0.0, 0.0, 0.0)))

# Add a second transformation with no rotation as well, but with different translational coordinates.
transformer.pushTransformation(dv.kinematics.Transformationf(2000000, np.array([1.0, 2.0, 3.0]), (1.0, 0.0, 0.0, 0.0)))

# Interpolate transformation at the midpoint (time-wise); this should divide the translational coordinates
# of the second transformation by a factor of 2.0.
midpoint = transformer.getTransformAt(1500000)

# Print the resulting output.
print(f"Interpolated position at [{midpoint.getTimestamp()}]: {midpoint.getTranslation()}")
```
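For intuition about what interpolating between the nearest transformations involves, here is a numpy-only sketch of one common approach: linear interpolation of the translation and spherical linear interpolation (slerp) of the rotation quaternion. Whether the library interpolates rotations exactly this way is an assumption, and `slerp` and `interpolate_pose` are hypothetical helpers, not part of dv-processing. Quaternions are written as (w, x, y, z), and timestamps are assumed to be in microseconds, as in the sample above.

```python
import numpy as np


def slerp(q0, q1, alpha):
    """Spherical linear interpolation between unit quaternions given as (w, x, y, z)."""
    q0 = np.asarray(q0, dtype=float)
    q1 = np.asarray(q1, dtype=float)
    dot = float(np.dot(q0, q1))
    if dot < 0.0:
        # q and -q encode the same rotation; flip one to follow the shorter arc.
        q1, dot = -q1, -dot
    if dot > 0.9995:
        # Nearly identical rotations: normalized linear interpolation is numerically safer.
        q = q0 + alpha * (q1 - q0)
        return q / np.linalg.norm(q)
    theta = np.arccos(dot)
    return (np.sin((1.0 - alpha) * theta) * q0 + np.sin(alpha * theta) * q1) / np.sin(theta)


def interpolate_pose(pose0, pose1, timestamp):
    """Interpolate between two (timestamp, translation, quaternion) tuples."""
    t0, p0, q0 = pose0
    t1, p1, q1 = pose1
    alpha = (timestamp - t0) / (t1 - t0)  # 0 at pose0, 1 at pose1
    translation = (1.0 - alpha) * np.asarray(p0, dtype=float) + alpha * np.asarray(p1, dtype=float)
    return timestamp, translation, slerp(q0, q1, alpha)


# Same trajectory as the sample above: identity rotation, translation from the origin to (1, 2, 3).
start = (1_000_000, [0.0, 0.0, 0.0], [1.0, 0.0, 0.0, 0.0])
end = (2_000_000, [1.0, 2.0, 3.0], [1.0, 0.0, 0.0, 0.0])

ts, translation, rotation = interpolate_pose(start, end, 1_500_000)
print(ts, translation, rotation)  # translation is halfway between the two: (0.5, 1.0, 1.5)
```

Interpolating the rotation on the unit-quaternion sphere, rather than averaging matrix coefficients, keeps the result a valid rotation.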