Feature tracking

The dv-processing library provides several algorithm implementations for visual tracking of detected features. Feature tracking is intended for use in the frontends of visual odometry pipelines. In addition to event-based trackers, the library provides frame-based and hybrid (events plus frames) trackers, which make it possible to build visual odometry pipelines that leverage both input modalities.

Frame-based tracking

Frame-based feature tracking is performed using the Lucas-Kanade tracking algorithm. The following code sample shows how to run the frame-based tracker on frames coming from a live camera.

Note

This sample requires a camera that is capable of producing frames, e.g. a DAVIS series camera.

C++:

    #include <dv-processing/features/feature_tracks.hpp>
    #include <dv-processing/features/image_feature_lk_tracker.hpp>
    #include <dv-processing/io/camera_capture.hpp>

    #include <opencv2/highgui.hpp>

    int main() {
        // Open any camera
        dv::io::CameraCapture capture;

        // Make sure it supports frame stream output, throw an error otherwise
        if (!capture.isFrameStreamAvailable()) {
            throw dv::exceptions::RuntimeError("Input camera does not provide a frame stream.");
        }

        const cv::Size resolution = capture.getFrameResolution().value();

        // Initialize a preview window
        cv::namedWindow("Preview", cv::WINDOW_NORMAL);

        // Instantiate a visual tracker with known resolution, all parameters kept default
        auto tracker = dv::features::ImageFeatureLKTracker::RegularTracker(resolution);

        // Create a track container instance that is used to visualize tracks on an image
        dv::features::FeatureTracks tracks;

        // Run the frame processing while the camera is connected
        while (capture.isRunning()) {
            // Try to receive a frame, check if anything was received
            if (const auto frame = capture.getNextFrame()) {
                // Pass the frame to the tracker
                tracker->accept(*frame);

                // Run tracking
                const auto result = tracker->runTracking();

                // Pass tracking result into the track container which aggregates track history
                tracks.accept(result);

                // Generate and show a preview of recent tracking history
                cv::imshow("Preview", tracks.visualize(frame->image));
            }
            cv::waitKey(2);
        }

        return 0;
    }

Python:

    import dv_processing as dv
    import cv2 as cv

    # Open any camera
    capture = dv.io.CameraCapture()

    # Make sure it supports frame stream output, throw an error otherwise
    if not capture.isFrameStreamAvailable():
        raise RuntimeError("Input camera does not provide a frame stream.")

    # Initialize preview window
    cv.namedWindow("Preview", cv.WINDOW_NORMAL)

    # Instantiate a visual tracker with known frame resolution, all parameters kept default
    tracker = dv.features.ImageFeatureLKTracker.RegularTracker(capture.getFrameResolution())

    # Create a track container instance that is used to visualize tracks on an image
    tracks = dv.features.FeatureTracks()

    # Run the frame processing while the camera is connected
    while capture.isRunning():
        # Try to receive a frame
        frame = capture.getNextFrame()

        # Check if anything was received
        if frame is not None:
            # Pass the frame to the tracker
            tracker.accept(frame)

            # Run tracking
            result = tracker.runTracking()

            # Pass tracking result into the track container which aggregates track history
            tracks.accept(result)

            # Generate and show a preview of recent tracking history
            cv.imshow("Preview", tracks.visualize(frame.image))

        cv.waitKey(2)

Tracked features on a live frame from a camera.

Event-based tracking

Event-based Lucas Kanade tracker

Features can be detected and tracked on a stream of events. The dv::features::EventFeatureLKTracker does this by accumulating frames from events internally, running feature detection, and performing Lucas-Kanade tracking on the accumulated frames.
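To make the internal accumulation step more concrete, here is a minimal, library-independent sketch in plain Python. It assumes events are time-ordered (timestamp_us, x, y) tuples and that an accumulation window is complete once an event at or past its end has arrived. The helper name slice_into_frames and this exact slicing rule are illustrative assumptions, not part of the dv-processing API.

```python
def slice_into_frames(events, framerate):
    """Split time-ordered (timestamp_us, x, y) events into fixed-duration windows.

    Hypothetical helper for illustration only. Returns a tuple
    (complete_windows, pending_events). A window is reported as complete
    only once an event at or past its end has arrived.
    """
    interval_us = 1_000_000 // framerate  # e.g. 100 FPS -> 10 000 us per window
    complete, pending = [], []
    if not events:
        return complete, pending

    window_start = events[0][0]
    for event in events:
        # Close every window that ends at or before this event's timestamp
        while event[0] >= window_start + interval_us:
            complete.append(pending)
            pending = []
            window_start += interval_us
        pending.append(event)
    return complete, pending


events = [(0, 5, 5), (4_000, 6, 5), (9_999, 7, 5), (10_000, 8, 5), (25_000, 9, 5)]
frames, leftover = slice_into_frames(events, framerate=100)
print(len(frames), "complete windows,", len(leftover), "event(s) still pending")
```

Under these assumptions, a small batch of events may not complete any window, which mirrors why the tracker samples in this section check whether a tracking result was actually produced before using it.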

The following sample code shows how to use the event-only Lucas-Kanade tracker on an event stream coming from a live camera.

C++:

    #include <dv-processing/features/event_feature_lk_tracker.hpp>
    #include <dv-processing/features/feature_tracks.hpp>
    #include <dv-processing/io/camera_capture.hpp>

    #include <opencv2/highgui.hpp>

    int main() {
        // Open any camera
        dv::io::CameraCapture capture;

        // Make sure it supports event stream output, throw an error otherwise
        if (!capture.isEventStreamAvailable()) {
            throw dv::exceptions::RuntimeError("Input camera does not provide an event stream.");
        }

        const cv::Size resolution = capture.getEventResolution().value();

        // Initialize a preview window
        cv::namedWindow("Preview", cv::WINDOW_NORMAL);

        // Instantiate a visual tracker with known resolution, all parameters kept default
        auto tracker = dv::features::EventFeatureLKTracker<>::RegularTracker(resolution);

        // Run tracking by accumulating frames at 100 FPS
        tracker->setFramerate(100);

        // Create a track container instance that is used to visualize tracks on an image
        dv::features::FeatureTracks tracks;

        // Run the event processing while the camera is connected
        while (capture.isRunning()) {
            // Try to receive a batch of events, check if anything was received
            if (const auto events = capture.getNextEventBatch()) {
                // Pass the events to the tracker
                tracker->accept(*events);

                // Run tracking
                const auto result = tracker->runTracking();

                // Since we are passing events in fine-grained batches, tracking will not execute
                // until enough events are received; an invalid pointer is returned if tracking did not execute
                if (!result) {
                    continue;
                }

                // Pass tracking result into the track container which aggregates track history
                tracks.accept(result);

                // Generate and show a preview of recent tracking history
                cv::imshow("Preview", tracks.visualize(tracker->getAccumulatedFrame()));
            }
            cv::waitKey(2);
        }

        return 0;
    }

Python:

    import dv_processing as dv
    import cv2 as cv

    # Open any camera
    capture = dv.io.CameraCapture()

    # Make sure it supports event stream output, throw an error otherwise
    if not capture.isEventStreamAvailable():
        raise RuntimeError("Input camera does not provide an event stream.")

    # Initialize preview window
    cv.namedWindow("Preview", cv.WINDOW_NORMAL)

    # Instantiate a visual tracker with known resolution, all parameters kept default
    tracker = dv.features.EventFeatureLKTracker.RegularTracker(capture.getEventResolution())

    # Run tracking by accumulating frames at 100 FPS
    tracker.setFramerate(100)

    # Create a track container instance that is used to visualize tracks on an image
    tracks = dv.features.FeatureTracks()

    # Run the event processing while the camera is connected
    while capture.isRunning():
        # Try to receive a batch of events
        events = capture.getNextEventBatch()

        # Check if anything was received
        if events is not None:
            # Pass the events to the tracker
            tracker.accept(events)

            # Run tracking
            result = tracker.runTracking()

            # Since we are passing events in fine-grained batches, tracking will not execute
            # until enough events are received; `None` is returned if tracking did not execute
            if result is None:
                continue

            # Pass tracking result into the track container which aggregates track history
            tracks.accept(result)

            # Generate and show a preview of recent tracking history
            cv.imshow("Preview", tracks.visualize(tracker.getAccumulatedFrame()))

        cv.waitKey(2)

Tracked features on a stream of events from a camera.

Event-based mean shift tracker

Features can also be detected and tracked on a stream of events using the mean shift algorithm. Although mean shift is commonly used for clustering, the dv::features::MeanShiftTracker class provides a tracking implementation on event data based on the mean shift update. The class internally detects interesting features to track from events (by default it uses dv::features::EventBlobDetector) and tracks them by running a mean shift update on a normalized time surface of events. The tracking is performed by following the interesting points detected on the time surface. Because the track location update takes the intensity of the time surface into account, the algorithm shifts the tracks towards the latest events.

The algorithm can be summarized as follows:

1. Given a set of events, detect interesting blobs using dv::features::EventBlobDetector. (Note: this step only happens if no track has been initialized or if redetection is enabled.)

2. Compute the time surface representation of a given interval duration.

3. Given a set of input track locations, for each non-converged track retrieve the time surface of events within a configured window.

4. Calculate the mean of coordinates for the retrieved neighborhood, weighting each coordinate by the time surface intensity value.

5. Shift the initial track location by a mode, which is a vector going from the initial point to the mean coordinate multiplied by a learning rate factor.

6. If the magnitude of the mode vector is lower than a configured threshold, the track is considered to have converged into the new position, otherwise repeat from step one.

This algorithm is useful for tracking event blobs that can serve as points of interest in event processing algorithms.
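The update loop summarized above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not the library's implementation: events are (timestamp_us, x, y) tuples, the time surface is normalized linearly so that the newest event in the window maps to 1, the search window is a square of half-size bandwidth, and the names normalized_time_surface and mean_shift_update are hypothetical.

```python
import math


def normalized_time_surface(events, width, height, t_now, window_us):
    """Per-pixel recency in [0, 1]: 1 for an event at t_now, 0 for no recent event.

    Assumption: linear normalization over the time window.
    """
    surface = [[0.0] * width for _ in range(height)]
    for t, x, y in events:
        age = t_now - t
        if 0 <= age < window_us:
            surface[y][x] = max(surface[y][x], 1.0 - age / window_us)
    return surface


def mean_shift_update(surface, start, bandwidth, learning_rate=1.0, threshold=0.01, max_iter=50):
    """Move a track towards the intensity-weighted mean of its neighborhood."""
    height, width = len(surface), len(surface[0])
    x, y = start
    for _ in range(max_iter):
        cx, cy = int(round(x)), int(round(y))
        total = sum_x = sum_y = 0.0
        # Weighted mean of pixel coordinates inside the square search window
        for py in range(max(0, cy - bandwidth), min(height, cy + bandwidth + 1)):
            for px in range(max(0, cx - bandwidth), min(width, cx + bandwidth + 1)):
                weight = surface[py][px]
                total += weight
                sum_x += weight * px
                sum_y += weight * py
        if total == 0.0:
            break  # no recent events nearby, keep the current location
        # Mode: vector from the current location to the weighted mean, scaled by the learning rate
        shift_x = learning_rate * (sum_x / total - x)
        shift_y = learning_rate * (sum_y / total - y)
        x += shift_x
        y += shift_y
        if math.hypot(shift_x, shift_y) < threshold:
            break  # converged
    return x, y


# A 3x3 blob of fresh events centered at pixel (20, 15)
events = [(1_000, x, y) for x in (19, 20, 21) for y in (14, 15, 16)]
surface = normalized_time_surface(events, width=40, height=30, t_now=1_000, window_us=50_000)
track = mean_shift_update(surface, start=(16.0, 12.0), bandwidth=10)
print(track)
```

In this toy setup the track starts a few pixels away from the blob and is pulled onto its center. A learning rate below 1 makes each step smaller, trading convergence speed for smoother track motion.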

The following code sample shows how to use the mean shift tracker implementation to find and track event blobs in synthetically generated sample data.

C++:

    #include <dv-processing/core/event.hpp>
    #include <dv-processing/data/generate.hpp>
    #include <dv-processing/features/mean_shift_tracker.hpp>
    #include <dv-processing/visualization/events_visualizer.hpp>

    #include <opencv2/highgui.hpp>
    #include <opencv2/imgproc.hpp>

    [[nodiscard]] dv::EventStore generateEventClustersAtTime(const int64_t time, const std::vector<dv::Point2f> &clusters,
        const uint64_t numIter, const cv::Size &resolution, const int shift = -5);

    int main() {
        using namespace std::chrono_literals;

        // Use VGA resolution
        const cv::Size resolution(640, 480);

        // Initialize a slicer
        dv::EventStreamSlicer slicer;

        // Initialize a preview window
        cv::namedWindow("Preview", cv::WINDOW_NORMAL);

        // Initialize a list of clusters for synthetic data generation
        const std::vector<dv::Point2f> clusters(
            {dv::Point2f(550.f, 400.f), dv::Point2f(70.f, 300.f), dv::Point2f(305.f, 100.f)});

        // Generate some random events for a background
        dv::EventStore events = dv::data::generate::uniformlyDistributedEvents(0, resolution, 10'000);

        std::vector<int64_t> timestamps = {0, 40000, 80000, 120000, 160000, 200000, 240000, 280000, 320000, 360000};

        uint64_t numIter = 0;
        for (const auto time : timestamps) {
            auto eventCluster = generateEventClustersAtTime(time, clusters, numIter, resolution);
            events += eventCluster;
            events += dv::data::generate::uniformlyDistributedEvents(time, resolution, 10000, numIter);
            numIter++;
        }

        // Bandwidth value defining the size of the search window in which updated track location will be searched
        const int bandwidth = 10;

        // Time window used for the normalized time surface computation. In this case we take the last 50ms of events and
        // compute a normalized time surface over them
        const dv::Duration timeWindow = 50ms;

        // Initialize a mean shift tracker.
        dv::features::MeanShiftTracker meanShift = dv::features::MeanShiftTracker(resolution, bandwidth, timeWindow);

        dv::visualization::EventVisualizer visualizer(resolution);

        // Register a callback every 40 milliseconds
        slicer.doEveryTimeInterval(40ms, [&](const dv::EventStore &events) {
            meanShift.accept(events);
            auto meanShiftTracks = meanShift.runTracking();

            if (!meanShiftTracks) {
                return;
            }

            // Visualize mean shift tracks
            auto preview = visualizer.generateImage(events);
            auto points = dv::data::fromTimedKeyPoints(meanShiftTracks->keypoints);
            cv::drawKeypoints(preview, points, preview, dv::visualization::colors::red);

            cv::imshow("Preview", preview);
            cv::waitKey(300);
        });

        slicer.accept(events);

        return EXIT_SUCCESS;
    }

    dv::EventStore generateEventClustersAtTime(const int64_t time, const std::vector<dv::Point2f> &clusters,
        const uint64_t numIter, const cv::Size &resolution, const int shift) {
        // Declare a region filter which we will use to filter out-of-bounds events in the next step
        dv::EventRegionFilter filter(cv::Rect(0, 0, resolution.width, resolution.height));
        const float offset = static_cast<float>(shift * static_cast<int>(numIter));
        dv::EventStore eventFiltered;
        for (const auto &cluster : clusters) {
            const auto xShift = cluster.x() + offset;
            const auto yShift = cluster.y() + offset;
            const dv::Point2f point = dv::Point2f(xShift, yShift);
            // Generate a batch of normally distributed events around each of the cluster centers
            filter.accept(dv::data::generate::normallyDistributedEvents(time, point, dv::Point2f(3.f, 3.f), 1'000));

            // Apply region filter to the events to filter out events outside valid dimensions
            eventFiltered += filter.generateEvents();
        }

        return eventFiltered;
    }

Python:

    import datetime

    import dv_processing as dv
    import cv2 as cv


    def generate_event_clusters_at_time(time, clusters, num_iter, shift=-5):
        # Declare a region filter which we will use to filter out-of-bounds events in the next step
        event_filter = dv.EventRegionFilter((0, 0, resolution[0], resolution[1]))
        event_filtered = dv.EventStore()
        offset = shift * num_iter
        for cluster in clusters:
            x_coord = cluster[0] + offset
            y_coord = cluster[1] + offset

            # Generate a batch of normally distributed events around each of the cluster centers
            event_filter.accept(dv.data.generate.normallyDistributedEvents(time, (x_coord, y_coord), (3, 3), 1000))

            # Apply region filter to the events to filter out events outside valid dimensions
            event_filtered.add(event_filter.generateEvents())

        return event_filtered


    def run_mean_shift(events):
        mean_shift.accept(events)
        mean_shift_tracks = mean_shift.runTracking()

        # Nothing to draw if tracking did not execute (mirrors the check in the C++ sample)
        if mean_shift_tracks is None:
            return

        preview = visualizer.generateImage(events)

        # Draw markers on each of the track coordinates
        for index in range(len(mean_shift_tracks.keypoints)):
            track = mean_shift_tracks.keypoints[index]
            cv.drawMarker(preview, (int(track.pt[0]), int(track.pt[1])), dv.visualization.colors.red(),
                          cv.MARKER_CROSS, 20, 2)

        # Show the preview image with detected tracks
        cv.imshow("Preview", preview)
        cv.waitKey(10)


    # Use VGA resolution
    resolution = (640, 480)

    # Initialize a slicer
    slicer = dv.EventStreamSlicer()

    # Initialize a preview window
    cv.namedWindow("Preview", cv.WINDOW_NORMAL)

    # Initialize a list of clusters for synthetic data generation
    clusters = [(550, 400), (70, 300), (305, 100)]

    # Generate some random events for a background
    events = dv.data.generate.uniformlyDistributedEvents(0, resolution, 10000)

    timestamps = [0, 40000, 80000, 120000, 160000, 200000, 240000, 280000, 320000, 360000]

    num_iter = 0
    for time in timestamps:
        event_cluster = generate_event_clusters_at_time(time, clusters, num_iter)
        events.add(event_cluster)
        events.add(dv.data.generate.uniformlyDistributedEvents(time, resolution, 10000, num_iter))
        num_iter += 1

    # Parameter defining the spatial window [pixels] in which the new track position will be searched
    bandwidth = 10

    # Window of time used to compute the time surface used for the tracking update
    time_window = datetime.timedelta(milliseconds=50)

    # Initialize a mean shift tracker
    mean_shift = dv.features.MeanShiftTracker(resolution, bandwidth, time_window)

    visualizer = dv.visualization.EventVisualizer(resolution)

    # Register a callback every 40 milliseconds
    slicer.doEveryTimeInterval(datetime.timedelta(milliseconds=40), run_mean_shift)

    slicer.accept(events)

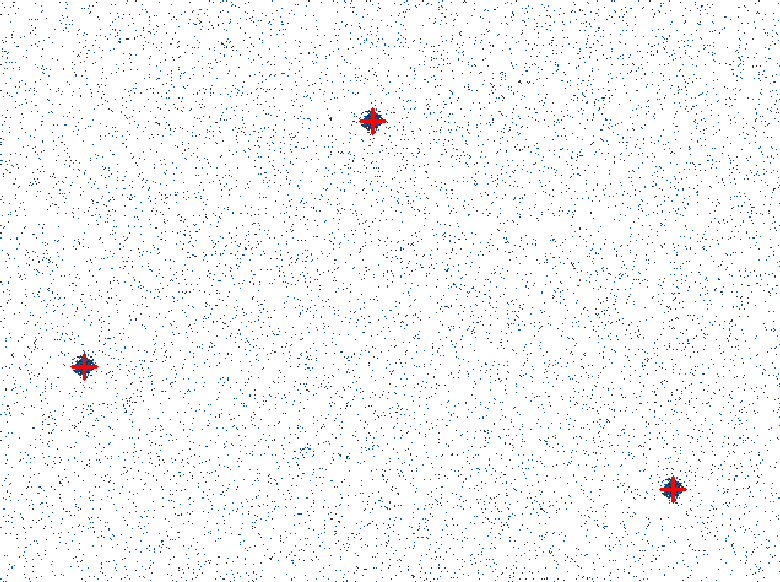

Expected output of the mean-shift-tracker sample usage. Tracking eight blobs marked with red crosses.

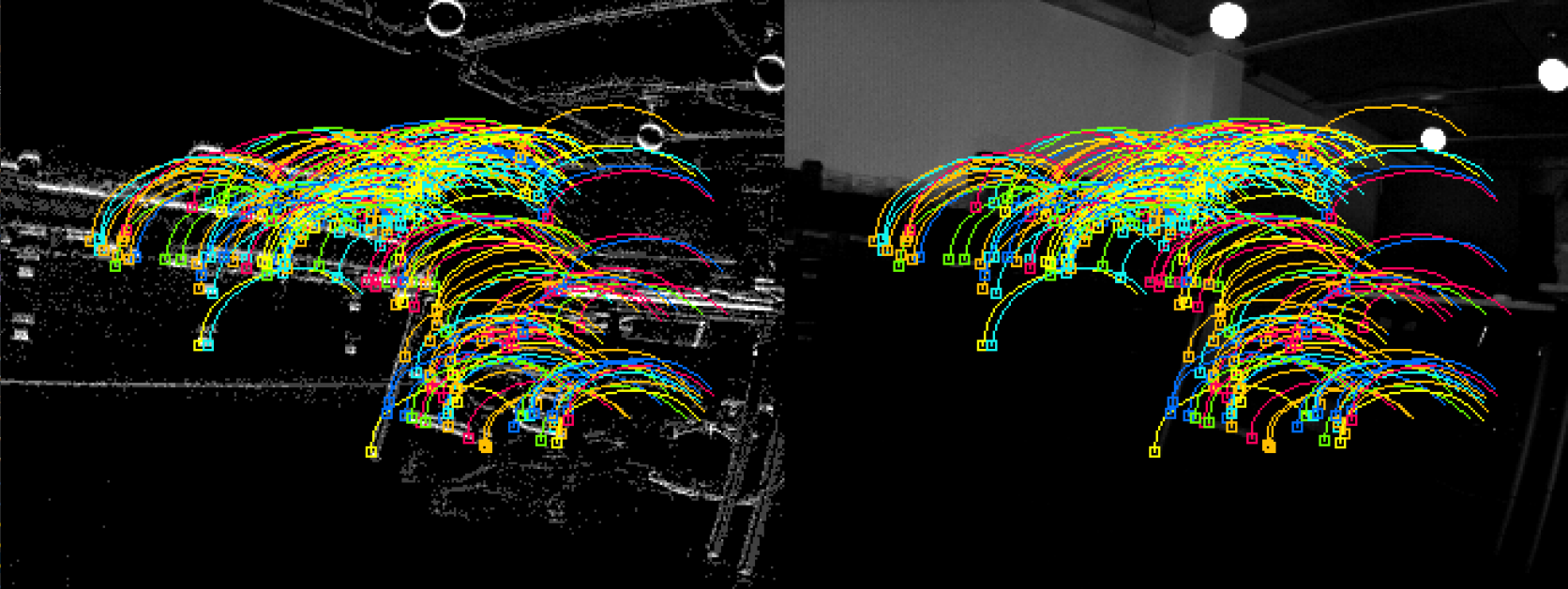

Hybrid tracking

Because events allow tracking at a high framerate, feature tracking on frames can be improved by also tracking features on intermediate frames accumulated from events between consecutive camera frames. The intermediate tracking results can be used as a prior for the frame tracking algorithm. Such an approach is implemented in dv::features::EventCombinedLKTracker: it performs regular Lucas-Kanade tracking on frames, but also constructs intermediate accumulated frames to predict the locations of tracks in the next frame and uses this information as a prior for the Lucas-Kanade tracking algorithm.
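The idea of chaining intermediate tracking results into a prior can be sketched in plain Python. This sketch makes several assumptions that the text above does not confirm: intermediate frames are spaced evenly between two camera frames, and each intermediate tracking step is represented by a caller-supplied function. The names intermediate_timestamps, predict_prior, and track_step are hypothetical and not part of the dv-processing API.

```python
def intermediate_timestamps(t_prev_us, t_next_us, num_intermediate):
    """Evenly spaced timestamps strictly between two frame timestamps (assumed spacing)."""
    step = (t_next_us - t_prev_us) / (num_intermediate + 1)
    return [round(t_prev_us + step * (i + 1)) for i in range(num_intermediate)]


def predict_prior(point, track_step, timestamps):
    """Chain per-interval tracking results into a predicted location for the next frame.

    track_step(point, timestamp) stands in for tracking one feature on the
    accumulated frame at `timestamp` and returning its new location.
    """
    x, y = point
    for timestamp in timestamps:
        x, y = track_step((x, y), timestamp)
    return x, y


# Two camera frames 60 ms apart with 5 intermediate accumulated frames
stamps = intermediate_timestamps(0, 60_000, 5)

# Toy motion model: the feature moves 2 pixels to the right per intermediate frame
prior = predict_prior((100.0, 50.0), lambda p, t: (p[0] + 2.0, p[1]), stamps)
print(stamps, prior)
```

The predicted location would then serve as the starting guess when tracking the feature on the next camera frame, so the frame-to-frame search only has to correct a small residual displacement.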

The following sample code shows how to use the hybrid event-frame Lucas-Kanade tracker on both streams coming from a live camera.

Note

This sample requires a camera that is capable of producing frames and events, e.g. a DAVIS series camera.

C++:

    #include <dv-processing/features/event_combined_lk_tracker.hpp>
    #include <dv-processing/features/feature_tracks.hpp>
    #include <dv-processing/io/camera_capture.hpp>

    #include <opencv2/highgui.hpp>

    #include <queue>

    int main() {
        // Open any camera
        dv::io::CameraCapture capture;

        // Make sure it supports correct stream outputs, throw an error otherwise
        if (!capture.isEventStreamAvailable()) {
            throw dv::exceptions::RuntimeError("Input camera does not provide an event stream.");
        }
        if (!capture.isFrameStreamAvailable()) {
            throw dv::exceptions::RuntimeError("Input camera does not provide a frame stream.");
        }

        const cv::Size resolution = capture.getEventResolution().value();

        // Initialize a preview window
        cv::namedWindow("Preview", cv::WINDOW_NORMAL);

        // Instantiate a visual tracker with known resolution, all parameters kept default
        auto tracker = dv::features::EventCombinedLKTracker<>::RegularTracker(resolution);

        // Accumulate and track on 5 intermediate accumulated frames between each actual frame pair
        tracker->setNumIntermediateFrames(5);

        // Create a track container instance that is used to visualize tracks on an image
        dv::features::FeatureTracks tracks;

        // Use a queue to store incoming frames to make sure all the data has arrived prior to running the tracking
        std::queue<dv::Frame> frameQueue;

        // Run the processing while the camera is connected
        while (capture.isRunning()) {
            // Try to receive a frame, check if anything was received
            if (const auto frame = capture.getNextFrame()) {
                // Push the received frame into the frame queue
                frameQueue.push(*frame);
            }

            // Try to receive a batch of events, check if anything was received
            if (const auto events = capture.getNextEventBatch()) {
                // Pass the events to the tracker
                tracker->accept(*events);

                // Check if we have ready frames and if enough events have arrived already
                if (frameQueue.empty() || frameQueue.front().timestamp > events->getHighestTime()) {
                    continue;
                }

                // Take the oldest frame from the queue
                const auto frame = frameQueue.front();

                // Pass it to the tracker as well
                tracker->accept(frame);

                // Remove the used frame from the queue
                frameQueue.pop();

                // Run tracking
                const auto result = tracker->runTracking();

                // Validate that the tracking was successful
                if (!result) {
                    continue;
                }

                // Pass tracking result into the track container which aggregates track history
                tracks.accept(result);

                // Generate and show a preview of recent tracking history on both accumulated frames and the frame image
                // Take the set of intermediate accumulated frames from the tracker
                const auto accumulatedFrames = tracker->getAccumulatedFrames();
                if (!accumulatedFrames.empty()) {
                    cv::Mat preview;
                    // Draw visualization on both images and concatenate them horizontally
                    cv::hconcat(
                        tracks.visualize(accumulatedFrames.back().pyramid.front()), tracks.visualize(frame.image), preview);
                    // Show the final preview image
                    cv::imshow("Preview", preview);
                }
            }

            cv::waitKey(2);
        }

        return 0;
    }

Python:

    import dv_processing as dv
    import cv2 as cv

    # Open any camera
    capture = dv.io.CameraCapture()

    # Make sure it supports correct stream outputs, throw an error otherwise
    if not capture.isEventStreamAvailable():
        raise RuntimeError("Input camera does not provide an event stream.")
    if not capture.isFrameStreamAvailable():
        raise RuntimeError("Input camera does not provide a frame stream.")

    # Initialize preview window
    cv.namedWindow("Preview", cv.WINDOW_NORMAL)

    # Instantiate a visual tracker with known resolution, all parameters kept default
    tracker = dv.features.EventCombinedLKTracker.RegularTracker(capture.getEventResolution())

    # Accumulate and track on 5 intermediate accumulated frames between each actual frame pair
    tracker.setNumIntermediateFrames(5)

    # Create a track container instance that is used to visualize tracks on an image
    tracks = dv.features.FeatureTracks()

    # Use a list to store incoming frames to make sure all the data has arrived prior to running the tracking
    frame_queue = []

    # Run the processing while the camera is connected
    while capture.isRunning():
        # Try to receive a frame
        frame = capture.getNextFrame()

        # Check if anything was received
        if frame is not None:
            frame_queue.append(frame)

        # Try to receive a batch of events
        events = capture.getNextEventBatch()

        # Check if anything was received
        if events is not None:
            # Pass the events to the tracker
            tracker.accept(events)

            # Check if we have ready frames and if enough events have arrived already
            if len(frame_queue) == 0 or frame_queue[0].timestamp > events.getHighestTime():
                continue

            # Take the oldest frame from the queue and remove it
            frame = frame_queue.pop(0)

            # Pass it to the tracker as well
            tracker.accept(frame)

            # Run tracking
            result = tracker.runTracking()

            # Validate that the tracking was successful
            if result is None:
                continue

            # Pass tracking result into the track container which aggregates track history
            tracks.accept(result)

            # Generate and show a preview of recent tracking history on both accumulated frames and the frame image
            # Take the set of intermediate accumulated frames from the tracker
            accumulated_frames = tracker.getAccumulatedFrames()
            if len(accumulated_frames) > 0:
                # Draw visualization on both images and concatenate them horizontally
                preview = cv.hconcat([tracks.visualize(accumulated_frames[-1].pyramid[0]), tracks.visualize(frame.image)])

                # Show the final preview image
                cv.imshow("Preview", preview)

        cv.waitKey(2)

Tracked features on frame and event streams from a camera.
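The hybrid samples buffer frames until the event stream has caught up with them. The following plain-Python sketch isolates that synchronization rule, using bare integer timestamps in place of frames. The helper name pop_ready_frame is hypothetical and only illustrates the queue condition used in the samples.

```python
from collections import deque


def pop_ready_frame(frame_queue, highest_event_time):
    """Return the oldest buffered frame timestamp once events cover it, else None."""
    if not frame_queue or frame_queue[0] > highest_event_time:
        return None
    return frame_queue.popleft()


# Two buffered frames (timestamps in microseconds) and four incoming event batches,
# each described only by its highest event timestamp
frame_queue = deque([1_000, 2_000])
processed = []
for highest_event_time in (900, 1_500, 1_600, 2_500):
    frame_time = pop_ready_frame(frame_queue, highest_event_time)
    if frame_time is not None:
        processed.append((frame_time, highest_event_time))

print(processed)
```

A frame is handed to the tracker only after an event batch reaching at least the frame timestamp has been accepted, so all events preceding the frame are available when tracking runs.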